Introduction

Last Saturday, August 9, the Debian project released the latest version of the Linux distribution “Debian”. Version 13 is also known as “Trixie” and, like its predecessor “Bookworm”, contains many new features and improvements. Since some credativ employees are also active members of the Debian project, the new version is naturally a special reason for us to take a look at some of its highlights.

From July 14 to 19, 2025, this year’s Debian Conference (DebConf25) is taking place in Brest, Western France, with over 450 participants – the central meeting of the global Debian community. The DebConf annually brings together developers, maintainers, contributors, and enthusiasts to collaboratively work on the free Linux distribution Debian and exchange ideas on current developments.

credativ is once again participating as a sponsor this year – and is also represented on-site by several employees.

A Week Dedicated to Free Software

DebConf25 offers a diverse program with over 130 sessions: technical presentations, discussion panels, workshops, and BoF sessions (“Birds of a Feather”) on a wide variety of topics from the Debian ecosystem. Some key topics this year:

- Progress surrounding Debian 13 “Trixie”, whose release is currently being actively worked on

- Reproducible Builds, System Integration, and Security Topics

- Debian in Cloud Environments and Container Contexts

- Improved CI/CD Infrastructure and New Tools for Maintainers

Many of the presentations will, as always, be recorded and made publicly available on video.debian.net, or can be watched live via https://debconf25.debconf.org/.

Credativ’s Commitment – not just On-Site

As a long-standing part of the Debian community, it is natural for credativ to contribute as a sponsor to DebConf again in 2025. Furthermore, our colleagues Bastian, Martin, and Noël are on-site to exchange ideas with other developers, attend presentations or BoFs, and experience current trends in the community.

Especially for companies focused on professional open-source services, Debian remains a cornerstone – whether in data centers, in the embedded sector, or in complex infrastructure projects.

Debian Remains Relevant – both Technically and Culturally

Debian is not only one of the most stable and reliable Linux distributions but also represents a special form of community and collaboration. The open, transparent, and decentralized organization of the project remains exemplary to this day.

For us at credativ, the Debian project has always been a central element of our work – and at the same time, a community to which we actively contribute through technical contributions, package maintenance, and long-term commitment.

Thank You, DebConf Team!

Heartfelt thanks go to the DebConf25 organizing team, as well as to all helpers who made this great conference possible. Brest is a beautiful and fitting venue with fresh Atlantic air, a relaxed atmosphere, and ample space for exchange and collaboration.

Outlook for 2026

Planning for DebConf26 is already underway. We look forward to the next edition of DebConf, which will take place in Santa Fe, Argentina – and to continuing to be part of this vibrant and important community in the future.

Having a Build Infrastructure for Extended Support of End-of-Life Linux Distributions

Intro

When it comes to providing extended support for end-of-life (EOL) Linux distributions, the traditional method of building packages from source may present certain challenges. One of the biggest is the need for individual developers to install build dependencies in their own build environments. This often leads to different alternative build dependencies being installed, resulting in binary compatibility issues. Additionally, there are other challenges to consider, such as establishing an isolated and clean build environment for different package builds running in parallel, implementing an effective package validation strategy, and managing repository publishing.

To overcome these challenges, it is highly recommended to have a dedicated build infrastructure that enables packages to be built in a consistent and reproducible manner. With such an infrastructure, teams can address these issues effectively and ensure that the necessary packages and dependencies are available, eliminating the need for individual developers to manage their own build environments.

OpenSUSE’s Open Build Service (OBS) offers a powerful build infrastructure that is already well known among teams working on customized or private software packages built on top of major Linux distributions, and we found it equally useful for extended support of end-of-life (EOL) Linux distributions. With support for RPM-based, Debian-based, and Arch-based Linux distributions, OBS proves to be a versatile solution capable of meeting the needs of extended support for EOL Linux distributions.

On June 30, 2024, two major Linux distributions, Debian 10 “Buster” and CentOS 7, reached their End-of-Life (EOL) status. Both gained widespread adoption in numerous large enterprise environments. However, due to various factors, organizations may face challenges in completing migration processes in a timely manner, necessitating temporary extended support.

Once a distribution reaches its End-of-Life (EOL), upstream support, including mirrors and infrastructure, is typically removed. This poses a significant challenge for organizations seeking extended support, as they not only require access to a local offline mirror but also need the infrastructure to build packages in a manner consistent with the original upstream builds.

To achieve the goal of providing extended support for end-of-life (EOL) Linux distributions, we chose to set up a local mirror of the EOL Linux distribution together with a private OBS instance. To get a private OBS instance, you can download the OBS code from the upstream GitHub repository and install it on your local Linux distribution, or alternatively, you can simply download the OBS Appliance Installer to integrate a private OBS instance as a build infrastructure.

By adopting a private OBS instance as the build infrastructure for extended support of EOL Linux distributions, teams can simplify the package building process, minimize inconsistencies, and achieve reproducibility. This not only streamlines the development workflow but also enhances the overall quality and reliability of the generated packages. It also lets teams replicate the semi-original build process, ensuring that the resulting packages maintain the same quality, integrity, and compatibility as those generated upstream.

Allow us to demonstrate how OBS can be utilized for extended support of end-of-life (EOL) Linux distributions. Please note, however, that the following examples are purely for demonstration purposes and may not precisely reflect the specific approach or EOL product used in our actual project.

Config local mirror as DoD Repository

Once your private OBS instance is set up, you can log in to its WebUI via HTTPS as ‘Admin’ with the default password ‘opensuse’. Some configurations can only be changed with the Admin account, including managing users and groups and adding a Download on Demand (DoD) repository, which establishes a connection between the local mirror and a project created on the private OBS instance.

Add DoD Repository in Repository tab on your private OBS instance:

Config DoD with url for internal local mirror of EOL Linux Distribution:

After you have connected the local mirror of the EOL Linux distribution to the private OBS instance, the instance becomes the build infrastructure. Its support for the various major distribution types positions it as an ideal solution for a team seeking infrastructure to provide extended support for EOL Linux distributions.

Benefits of build infrastructure

OBS ensures consistency and reproducibility in the build process. It also provides a customizable build environment, allowing teams to tailor the environment to specific project requirements.

- Consistency and Reproducible Builds: OBS provides the option to perform builds in clean chroot or clean KVM environments, ensuring consistent and reproducible results. This reduces the risk of unexpected variations or errors during the build process.

- Customizable Build Environment: OBS allows teams to customize the build environment through project configuration, enabling them to define macros, packages, flags, and other build-related settings. This ensures compatibility with the specific requirements of the EOL Linux distribution being supported.

Customizable Build Environment.

- Isolation of Build Environments: OBS allows teams to create separate build environments for different projects or distributions, preventing interference or conflicts between builds. This enables efficient and parallel development on multiple EOL distributions simultaneously.

Each worker runs in isolation with a customizable build environment, efficiently and in parallel.

- Release Number Control: OBS offers control over the release numbers of built packages, ensuring compatibility and consistency with the original release numbering scheme. This is particularly important when working with EOL distributions.

See more details: https://en.opensuse.org/openSUSE:Build_Service_prjconf#Release
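The linked documentation describes a `Release:` line in the project configuration whose placeholders OBS fills in for every build. As a rough illustration of the idea, the following Python sketch expands such a template. It assumes the `<CI_CNT>` (check-in counter) and `<B_CNT>` (build counter) placeholders; the function name `expand_release` and the `.el7` suffix are hypothetical, and the code only mimics the substitution, it is not part of OBS.

```python
def expand_release(template: str, ci_cnt: int, b_cnt: int) -> str:
    """Expand the counters in a prjconf-style Release template.

    ci_cnt stands for the check-in counter and b_cnt for the build
    counter; both names are illustrative.
    """
    return (
        template
        .replace("<CI_CNT>", str(ci_cnt))
        .replace("<B_CNT>", str(b_cnt))
    )


if __name__ == "__main__":
    # Hypothetical template that keeps a distribution-like suffix.
    print(expand_release("<CI_CNT>.<B_CNT>.el7", ci_cnt=3, b_cnt=1))
```

Keeping the suffix in the template is one way to stay close to the original release numbering scheme of the EOL distribution.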

Demonstration of other key benefits

Here are some benefits of using OBS with screenshots:

- Built-in Access Control: OBS offers flexible access control, allowing team members to have different roles and permissions for various projects or distributions. This ensures efficient collaboration and streamlined workflow management.

Manage users and groups and different roles:

- Download on Demand (DoD): OBS supports the Download on Demand feature, which fetches build dependencies on-demand during the build process. This simplifies the build workflow and reduces the need for manual intervention.

- Offline Build Support: OBS enables the creation of an offline build environment via DoD, allowing teams to build packages without an internet connection. This is particularly crucial when working with EOL distributions, where internet access and official repositories may no longer be available.

Fetching build dependencies packages from offline local mirror via DoD:

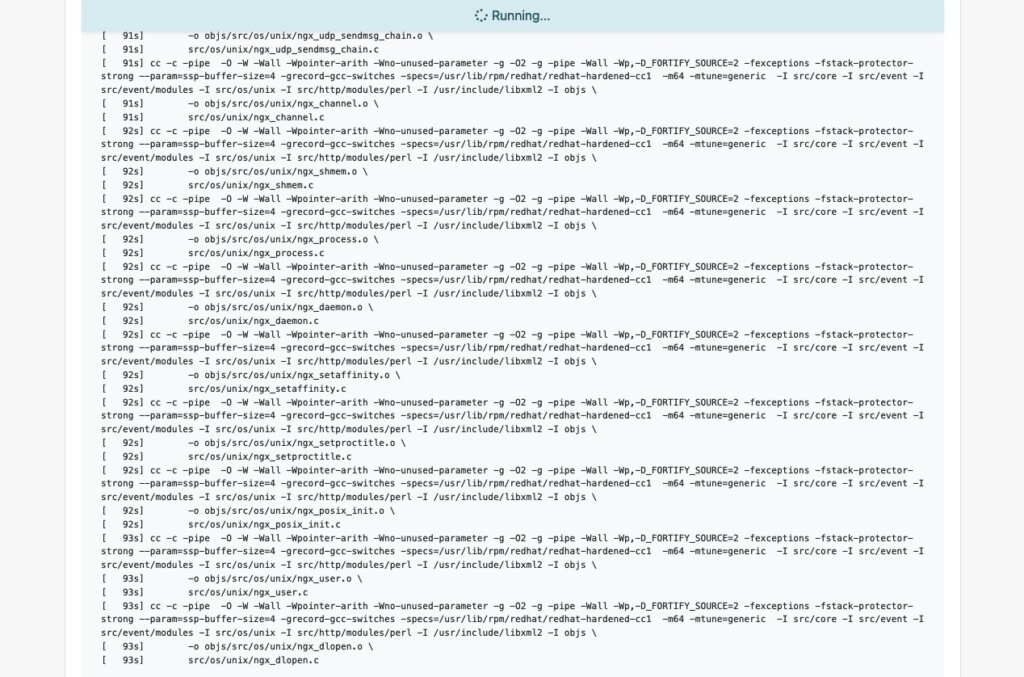

Start the build process after the build dependencies have been downloaded via DoD:

- Flexible Project Setup: OBS allows teams to create separate projects for development, staging, and publishing purposes. This controlled workflow ensures thorough testing and validation of packages before they are submitted to the production project for publishing.

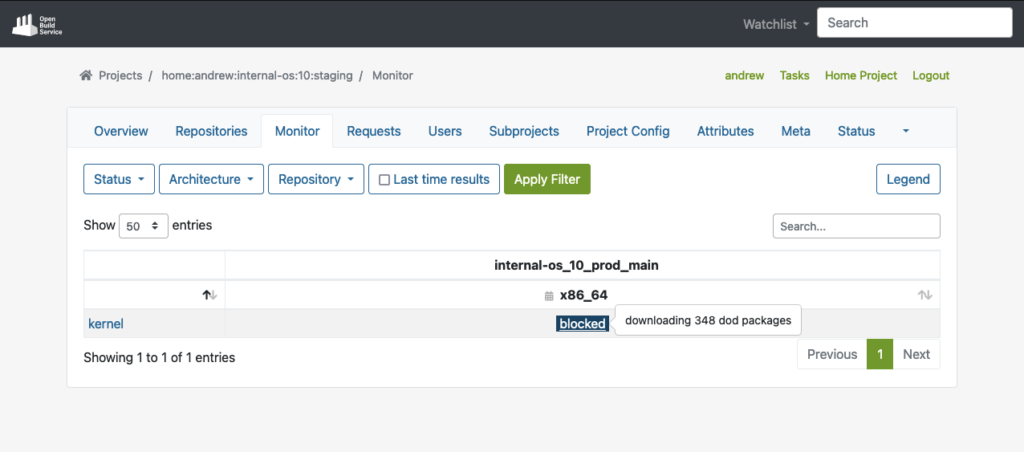

Build Result for Staging repo:

Automatically published in the staging repo once the package has been built:

You can start the package validation process with the staging repo, and then submit a package from staging to prod after it has passed validation:

The package submit request can be reviewed by prod repo master to accept or decline:

- Repository Publish Integration: OBS seamlessly integrates with local repository publishing, ensuring that all packages for the build process are readily available and published. This eliminates the need for additional configuration on internal or external repositories.

The build process starts after the package has been accepted into the Prod repo. The build status in the Prod repo is shown here:

Automatically published to the prod repo once the package build has succeeded:

- Automated Build via Pipelines: OBS supports automated builds that seamlessly integrate into delivery pipelines. Teams can set up triggers to initiate builds automatically based on predefined criteria, reducing manual effort and ensuring timely delivery of updated packages to customers.

Packages are built automatically when they are committed into an OBS project, which makes it easy to integrate OBS into a delivery pipeline.

In conclusion, OpenSUSE’s Open Build Service (OBS) provides a robust and comprehensive build infrastructure for teams working on extended support for EOL Linux distributions.

If you are interested in integrating a build infrastructure, or require extended support for any EOL Linux distribution, please reach out to us. Our team has the expertise to assist you in integrating a private OBS instance into your existing infrastructure.

This article was originally written by Andrew Lee

Introduction

When it comes to providing extended support for end-of-life (EOL) Linux distributions, the traditional method of building packages from sources may present certain challenges. One of the biggest challenges is the need for individual developers to install build dependencies on their own build environments. This often leads to different build dependencies being installed, resulting in issues related to binary compatibility. Additionally, there are other challenges to consider, such as establishing an isolated and clean build environment for different package builds in parallel, implementing an effective package validation strategy, and managing repository publishing, etc.

To overcome these challenges, it is highly recommended to have a dedicated build infrastructure that enables the building of packages in a consistent and reproducible way. With such an infrastructure, teams can address these issues effectively and ensure that the necessary packages and dependencies are available, eliminating the need for individual developers to manage their own build environments.

OpenSUSE’s Open Build Service (OBS) provides a widely recognized public build infrastructure that supports RPM-based, Debian-based, and Arch-based package builds. This infrastructure is well-regarded among users who require customized package builds on major Linux distributions. Additionally, OBS can be deployed as a private instance, offering a robust solution for organizations engaged in private software package builds. With its extensive feature set, OBS serves as a comprehensive build infrastructure that meets the requirements of teams to build security-patched package on top of already existing packages in order to extend the support of end-of-life (EOL) Linux distributions, for example.

Facts

On June 30, 2024, two major Linux distributions, Debian 10 “Buster” and CentOS 7, reached their End-of-Life (EOL) status. These distributions gained widespread adoption in numerous large enterprise environments. However, due to various factors, organizations may face challenges in completing migration processes in a timely manner, requiring temporary extended support for these distributions.

When a distribution reaches its End-of-Life (EOL), support from upstream, mirrors, and the corresponding infrastructure are typically removed. This creates a significant challenge for organizations still using those distributions and seeking extended support: they not only require access to a local offline mirror but also need the infrastructure to rebuild packages consistently with the former upstream builds whenever a rebuild is needed for security reasons.

Goal

OBS provides utilities that support teams in building packages. It gives them a build infrastructure that builds all existing source and binary packages along with their security patches in a semi-original build environment, further extending their support of EOL Linux distributions. This is achieved by keeping package builds at a similar level of quality and compatibility to their former original upstream builds.

Requirements

To achieve the goal mentioned above, two essential components are required:

- Local mirror of the EOL Linux distribution

- Private OBS instance.

To set up a private OBS instance, you can fetch OBS from the upstream GitHub repository and build and install it on your local machine, or alternatively, you can simply download the OBS Appliance Installer to integrate a private OBS instance as a new build infrastructure.

Keep in mind that the local mirror typically doesn’t contain the security patches to properly extend the support of the EOL distribution. Just rebuilding the existing packages won’t automatically extend the support of such distributions. Either those security patches must be maintained by the teams themselves and added to the package update by hand, or they are obtained from an upstream project that provides those patches already.

Nevertheless, once the private OBS instance is successfully installed, it can be linked with the local offline mirror of the distribution whose support the team wants to extend, and be configured as a semi-original build environment. This way, the build environment can be kept as close as possible to the original upstream build environment, maintaining a similar level of quality and compatibility even when packages are rebuilt with new patches for security updates.

This way, the already ended support of a Linux distribution can be extended because a designated team can not only build packages on their own but also provide patches for packages that are affected by bugs or security vulnerabilities.

Achievement

The OBS build infrastructure provides a semi-original build environment to simplify the package building process, reduce inconsistencies, and achieve reproducibility. By adopting this approach, teams can simplify their development and testing workflows and enhance the overall quality and reliability of the generated packages.

Demonstration

Allow us to demonstrate how OBS can provide the build and publish infrastructure that teams need for extending the support of End-of-Life (EOL) Linux distributions. However, please note that the following examples are purely for demonstration purposes and may not precisely reflect the specific approach used in our actual projects.

Config OBS

Once your private OBS instance is set up, you can log in to OBS by using its WebUI. You must use the ‘Admin’ account with its default password ‘opensuse’. After that, you can create a new project for the EOL Linux distribution as well as add users and groups for teams that are going to work on this project. In addition, you must link your project with a local mirror of the EOL Linux distribution and customize your OBS project to replicate the former upstream build environment.

OBS supports the Download on Demand (DoD) feature, which connects a designated project on the OBS instance with a local mirror and fetches build dependencies on demand during the build process. This simplifies the build workflow and reduces the need for manual intervention.

Note: some configurations are only available with the Admin account, including Manage Users, Groups and Add Download on Demand (DoD) Repository.

Now, let’s dive into the OBS configuration of such a project.

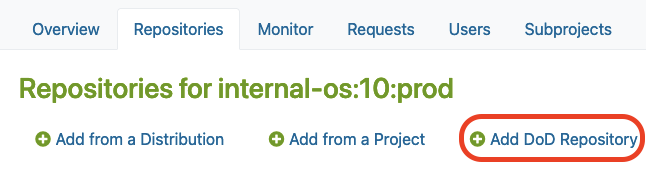

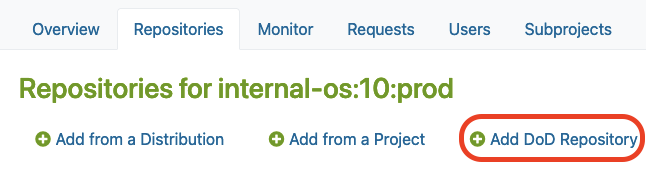

Where to Add DoD Repository

After you have created a new project in OBS, you can find the “Add DoD Repository” option in the Repositories tab when logged in with the ‘Admin’ account.

There, you must click the Add DoD Repository button as shown below:

Link your local mirror via DoD

Next, you can provide a URL to link your local mirror via “Add DoD Repository”.

By doing so, you are going to configure the DoD repository. For this, you have to:

- Name the DoD Repo

- Provide a URL that points to the local mirror that is going to be used as a base for building packages in our project

The build dependencies should now be available for OBS to download automatically via the DoD feature.
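The form in the WebUI ends up as a `download` entry in the project's meta data. To make the two inputs (repository name and mirror URL) more tangible, here is a small Python sketch that generates such a project meta document. The element and attribute names (`repository`, `download`, `arch`, `url`, `repotype`) reflect our understanding of the OBS project meta format and should be checked against your OBS version; the project name, the repository name `standard`, and the mirror URL are purely hypothetical.

```python
import xml.etree.ElementTree as ET


def dod_project_meta(project, repo, arch, mirror_url, repotype):
    """Build project meta XML with one Download on Demand repository.

    All argument values are supplied by the caller; nothing here
    talks to an OBS instance.
    """
    root = ET.Element("project", name=project)
    ET.SubElement(root, "title").text = f"DoD mirror for {project}"
    ET.SubElement(root, "description")
    repository = ET.SubElement(root, "repository", name=repo)
    # The download element points OBS at the local offline mirror.
    ET.SubElement(
        repository, "download", arch=arch, url=mirror_url, repotype=repotype
    )
    ET.SubElement(repository, "arch").text = arch
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical internal mirror of an RPM-based EOL distribution.
    print(
        dod_project_meta(
            project="internal-os:10",
            repo="standard",
            arch="x86_64",
            mirror_url="http://mirror.internal.example/os/x86_64/",
            repotype="rpmmd",
        )
    )
```

Generating the meta data from a script rather than clicking through the form can help when several projects need to point at the same mirror.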

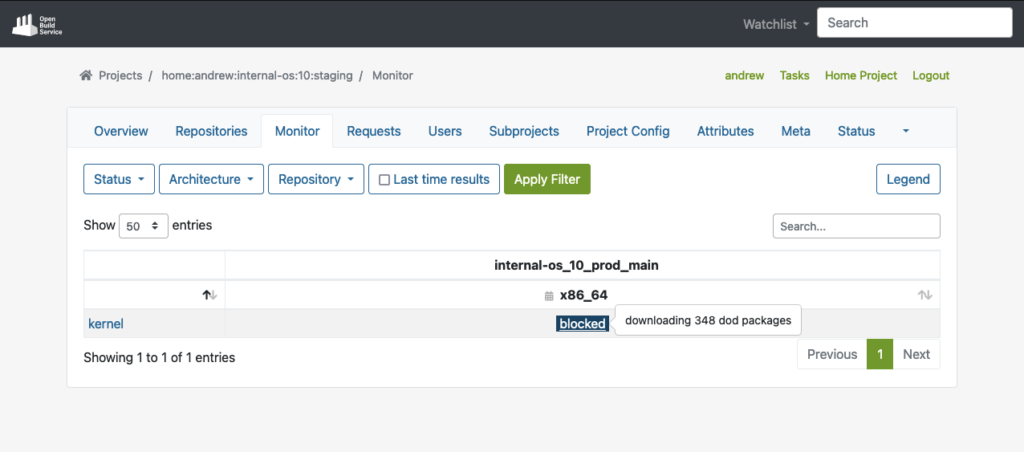

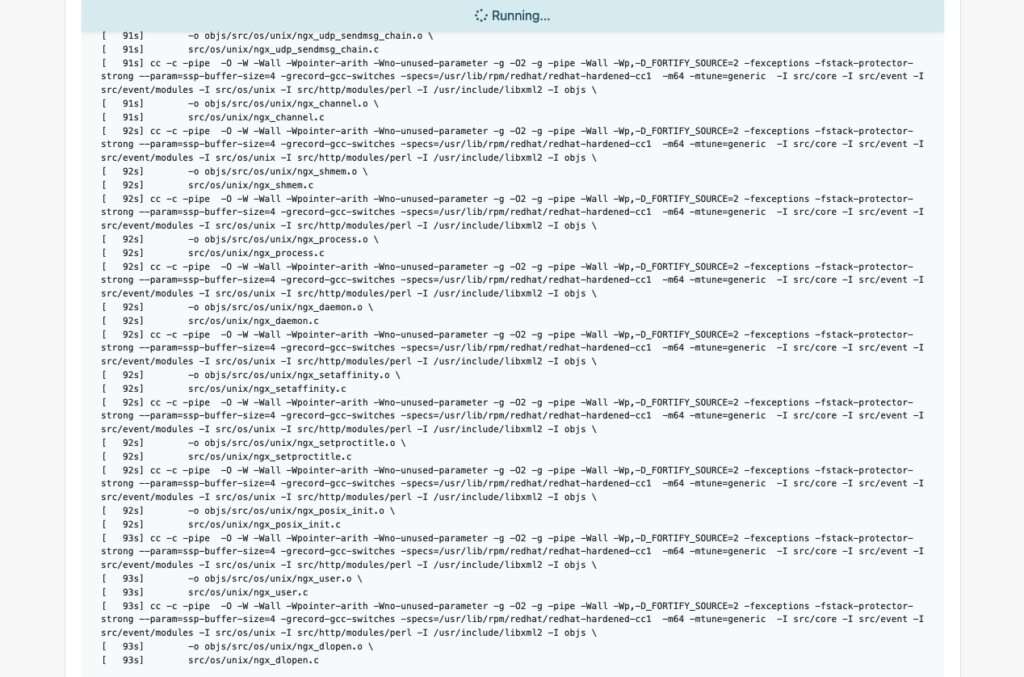

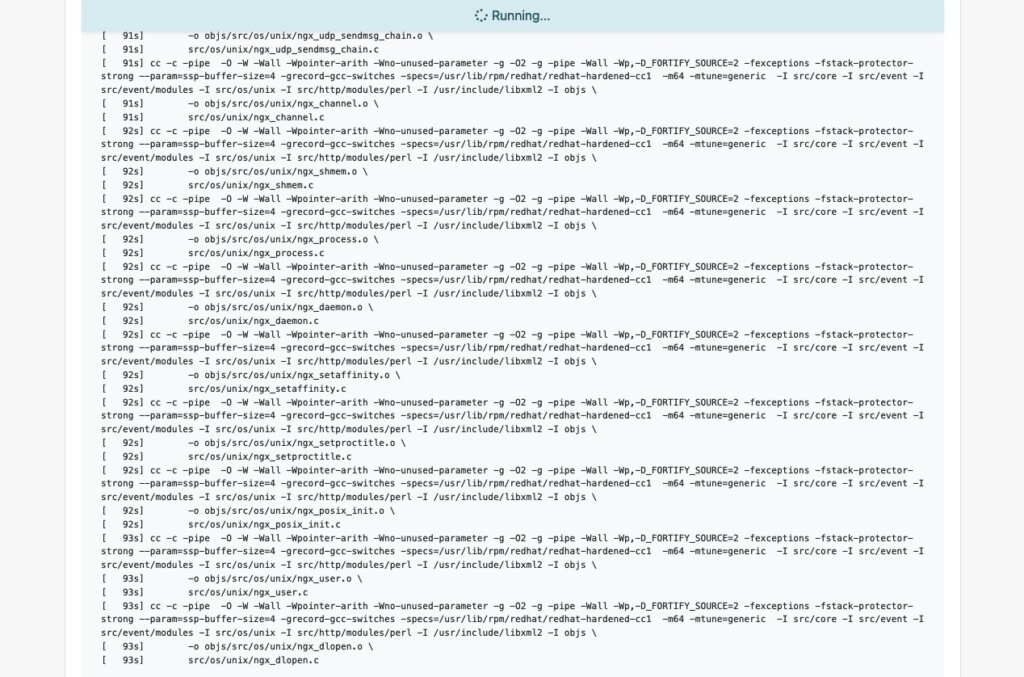

As you can see in the example below, OBS was able to fetch all the build dependencies for the shown “kernel” package via DoD before it actually started to build the package:

However, after you have connected the local mirror of the EOL Linux distribution, you should also customize the project configuration to re-create the semi-original build environment of the former upstream project in your OBS project.

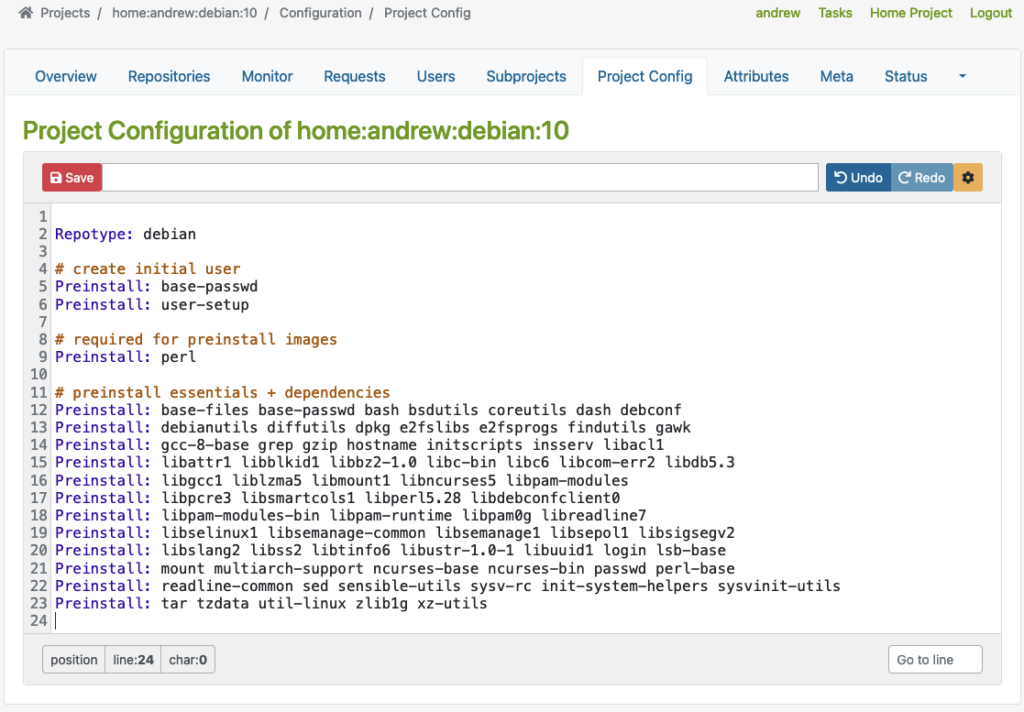

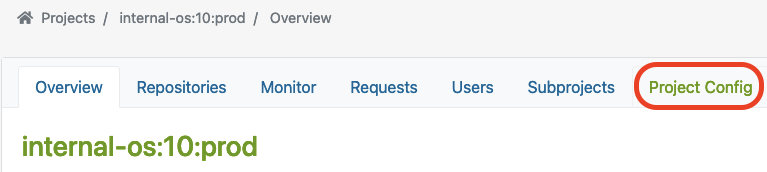

Project Config

OBS offers a customizable build environment via Project Config, allowing teams to meet specific project requirements.

It allows teams to customize the build environment through the project configuration, enabling them to define macros, packages, flags, and other build-related settings. This helps to adapt their project to all requirements needed to keep the compatibility with the original packages.

You can find the Project Config here:

Let’s have a closer look at some examples showing what the project configuration could look like for building RPM-based packages or Debian-based packages.

Depending on the actual package type that has been used by the EOL Linux distribution, the project configuration must be adjusted accordingly.

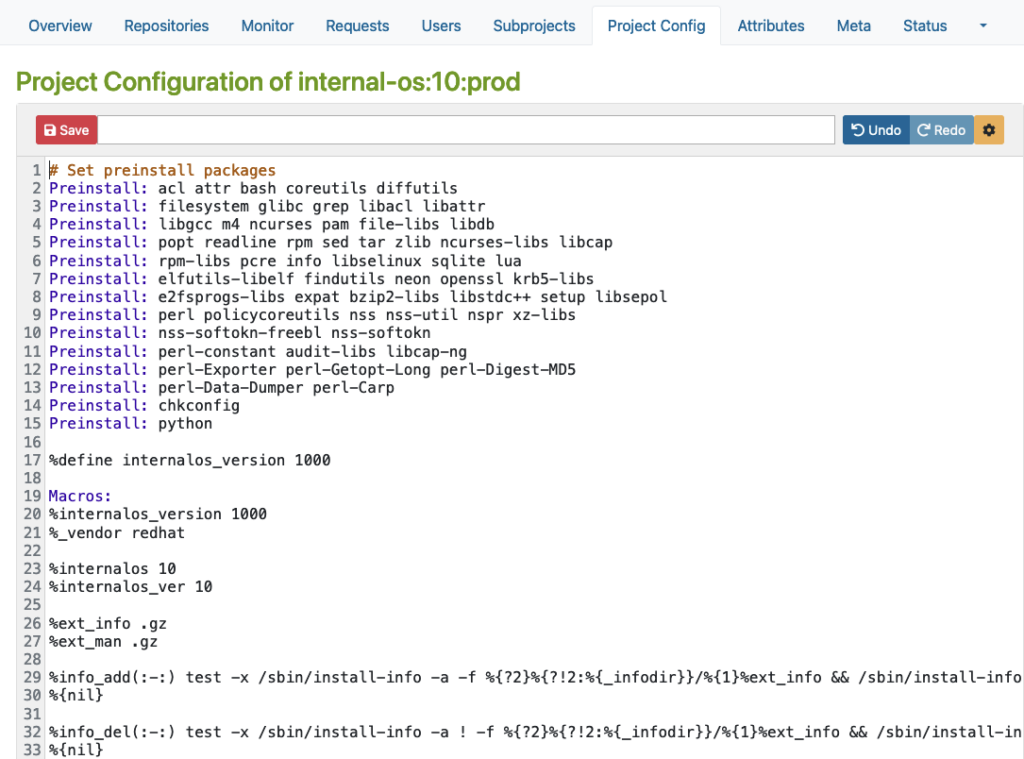

RPM-based config

Here is an example for an RPM-based distribution where you can define macros, packages, flags, and other build-related settings.

Please note that this example is only for demonstration purposes:

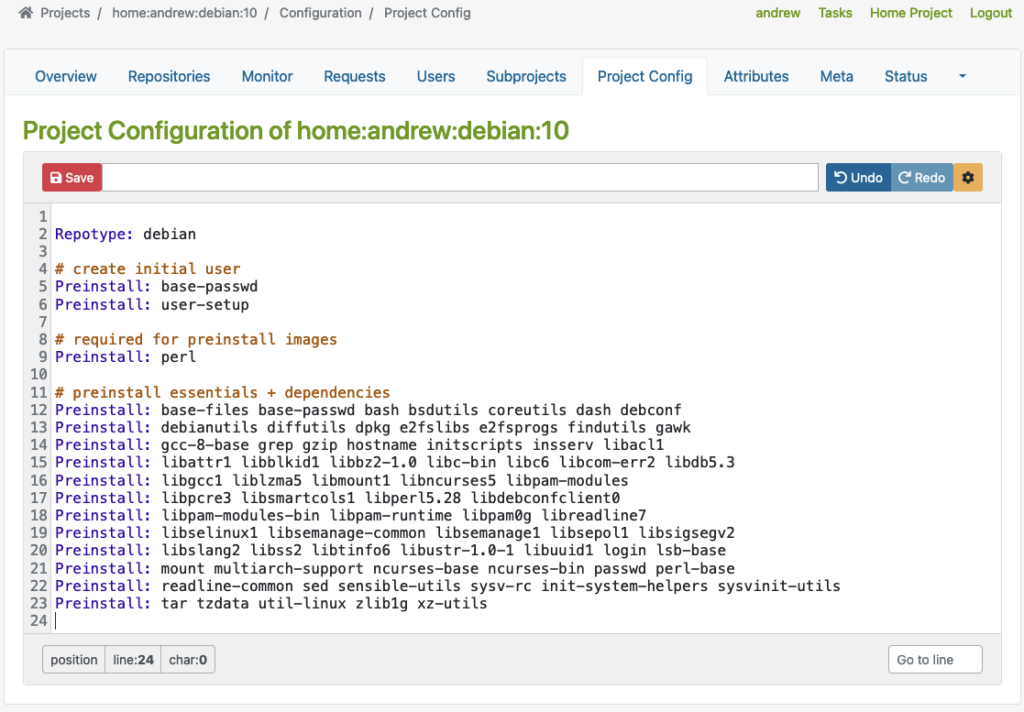

Debian-based config

Then, there is another example for a Debian-based distribution where you can specify Repotype: debian and define preinstalled packages and other build-related settings in the build environment.

Again, please note that this example is for demonstration purposes only:

As you can see in the examples above, the Repotype defines the repository format for the published repositories. Preinstall defines packages that should be available in the build environment while building other packages. You may find more details about the syntax in the Build Configuration chapter in the manual provided by openSUSE.

With this customizable build environment feature, each build starts in a clean chroot or KVM environment, providing consistency and reproducibility in the build process. Teams may have different build environments in different projects that are isolated and can be built in parallel. The separate projects, together with dedicated chroot or KVM environments for building packages, prevent interference or conflicts between builds.
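For readers who prefer text over screenshots, the following Python sketch renders a tiny project configuration with the two keywords discussed here plus an optional macro block. It is only an illustration under assumptions: the helper name `render_prjconf` is hypothetical, the package list is deliberately incomplete, and a real configuration for an EOL distribution needs many more entries.

```python
def render_prjconf(repotype, preinstall, macros=None):
    """Render a minimal prjconf text from a few settings.

    repotype   -- repository format for publishing, e.g. "debian"
    preinstall -- package names unpacked into the build environment
    macros     -- optional dict of RPM macro names to values
    """
    lines = [
        f"Repotype: {repotype}",
        "Preinstall: " + " ".join(preinstall),
    ]
    if macros:
        # Macro definitions are wrapped in a Macros: ... :Macros block.
        lines.append("Macros:")
        lines.extend(f"%{name} {value}" for name, value in macros.items())
        lines.append(":Macros")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Illustrative Debian-style configuration (package list shortened).
    print(render_prjconf("debian", ["bash", "coreutils", "dpkg", "tar"]))
    # Illustrative RPM-style configuration with one hypothetical macro.
    print(render_prjconf("rpm-md", ["bash", "rpm"], {"dist": ".el7"}))
```

Keeping such a configuration in version control makes it easier to review changes to the build environment over time.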

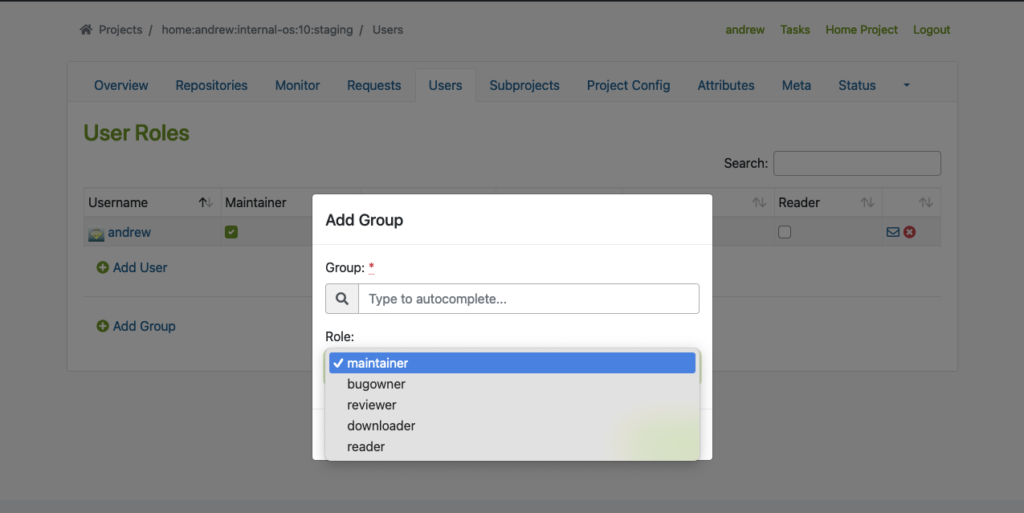

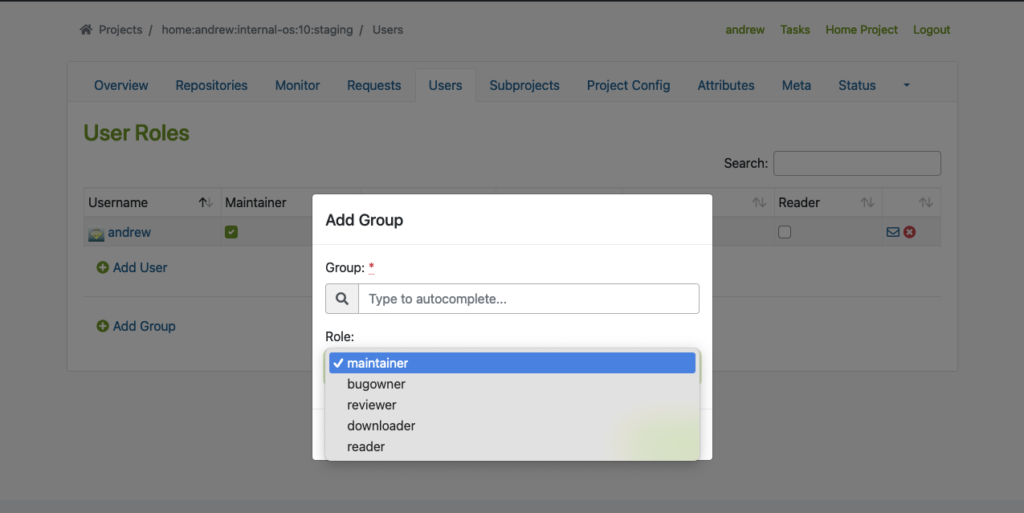

User Management

In addition to projects, OBS offers flexible access control, allowing team members to have different roles and permissions for various projects or distributions. This ensures efficient collaboration and streamlined workflow management.

To use this feature properly, we must create Users and Groups by using the Admin account in OBS. You may find the ‘Users’ tab within the project where you can add users and groups with different roles.

The following example shows how to add users and groups in a given project:

Flexible Project Setup

OBS allows teams to create separate projects for development, staging, and publishing purposes. This controlled workflow ensures thorough testing and validation of packages before they are submitted to the production project for publishing.

Testing Strategy with Projects

Before packages are released and published, proper testing of those packages is crucial. To achieve this, OBS offers repository publish integration that helps to implement a Testing Strategy. Developers may get their packages published in a corresponding Staging repository first before starting the validation process of the packages, so that they can be submitted to the Production project. From there, they will then be published and made available to other systems.

It seamlessly integrates with local repository publishing, ensuring that all packages in the build process are readily available. This eliminates the need for additional configuration on internal or external repositories.

The next example shows a ‘home:andrew:internal-os:10:staging’ project, which is used as a staging repository. There, we import the nginx source package to build and validate it afterward.

Start the Build Process

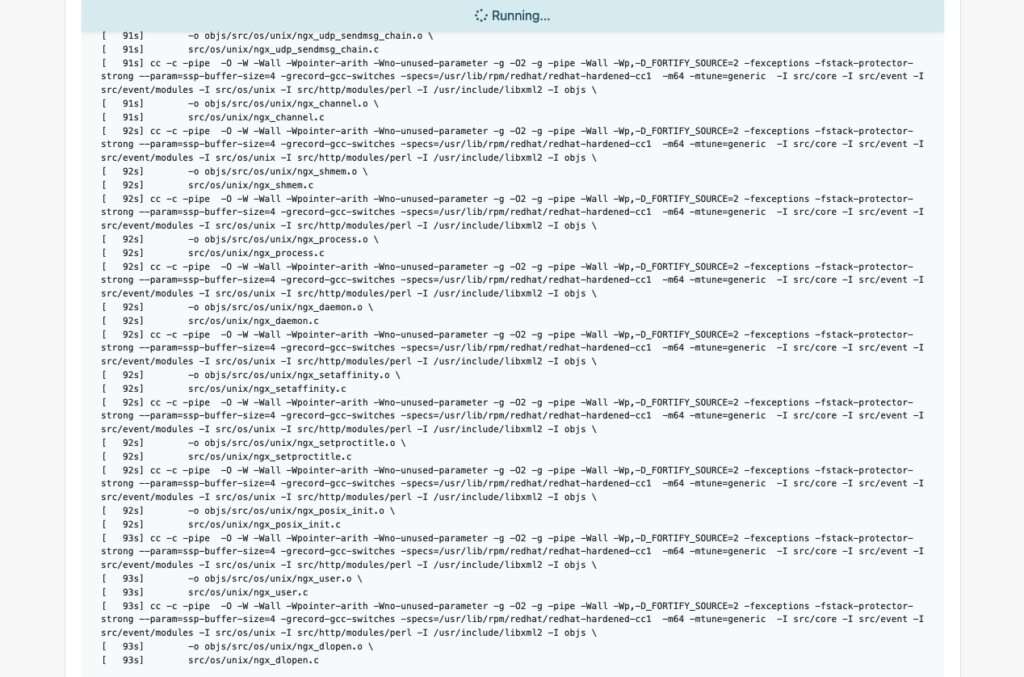

Once the build dependencies are downloaded via DoD, the build process starts automatically.

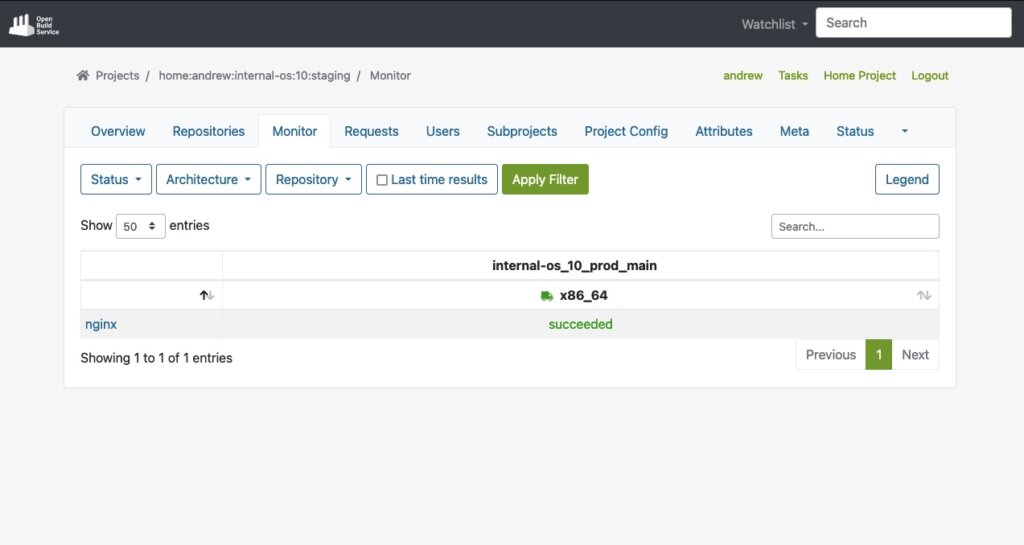

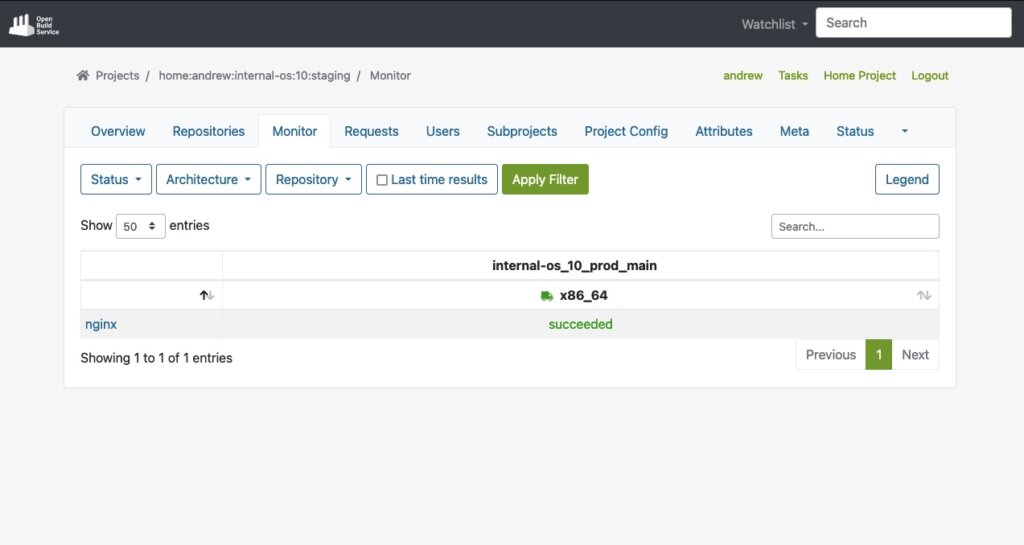

As soon as the build process for a package like ‘nginx’ succeeds, we will also see it in the interface:

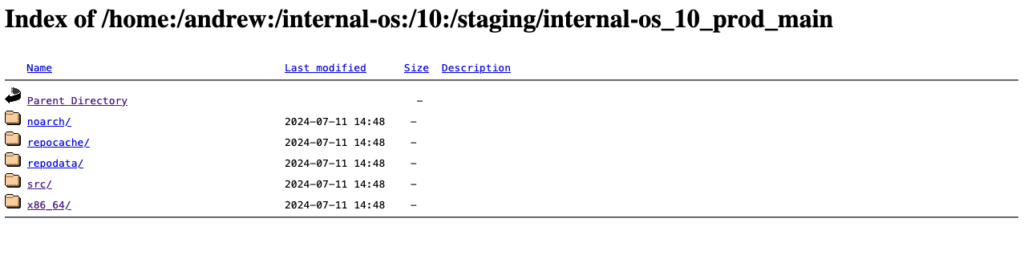

Last but not least, the package will automatically be published into a repository that is ready to be used with package managers like YUM. In our test case, the YUM-ready repository helps us to easily perform validation by conducting tests on VM or actual hardware.
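To point a test VM at such a repository, a `.repo` file is needed on the client. The Python sketch below derives one from the project name. It rests on several assumptions that you should verify on your own instance: that OBS publishes a project under a path in which every `:` becomes `:/`, that the repository inside the project is called `standard`, and that the host name and port in the example match your setup. The function names are hypothetical, and `gpgcheck` is disabled only to keep the sketch short.

```python
import configparser
import io


def publish_path(project: str, repository: str) -> str:
    """Map an OBS project name to its (assumed) published directory."""
    return project.replace(":", ":/") + "/" + repository + "/"


def yum_repo_file(base_url: str, project: str, repository: str) -> str:
    """Render a .repo stanza for a published OBS repository."""
    repo_id = project.replace(":", "_")
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep option names exactly as written
    parser[repo_id] = {
        "name": f"{project} ({repository})",
        "baseurl": base_url.rstrip("/") + "/" + publish_path(project, repository),
        "enabled": "1",
        # Disabled for brevity only; enable signature checks for real use.
        "gpgcheck": "0",
    }
    buffer = io.StringIO()
    parser.write(buffer, space_around_delimiters=False)
    return buffer.getvalue()


if __name__ == "__main__":
    print(
        yum_repo_file(
            "http://obs.internal.example:82",  # hypothetical host and port
            "home:andrew:internal-os:10:staging",
            "standard",
        )
    )
```

The resulting text can be dropped into `/etc/yum.repos.d/` on the validation system.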

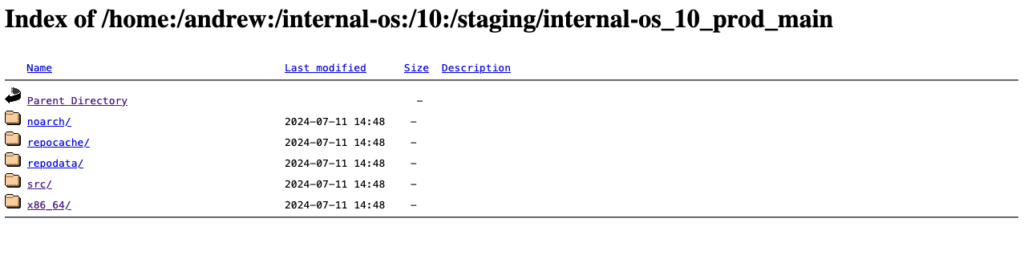

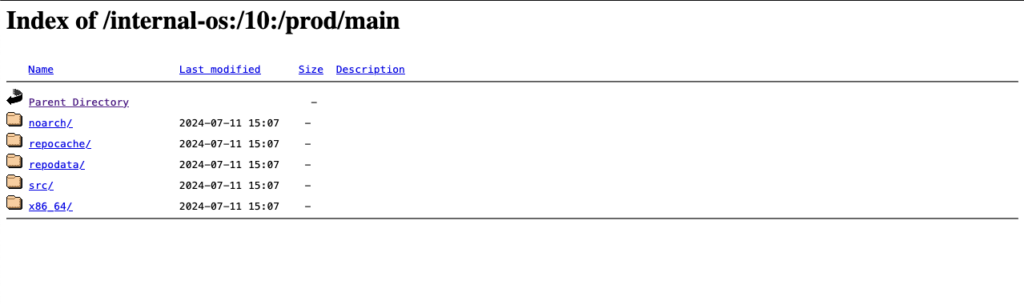

Below, you can see an example of this staging repository (Index of the YUM-ready staging repository):

Please note: To meet the testing strategy in our example, packages should only be submitted to the Prod project once they have successfully passed the validation process in the Staging repository.

Submit to Prod after Validation

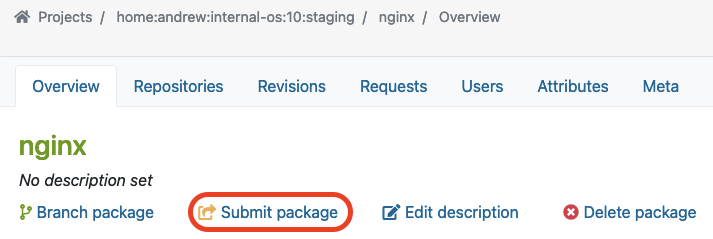

As soon as we validate the functionality of a package in the staging project and repository, we can move on by submitting the package to the production project. Underneath the package overview page (e.g., in our case, the ‘nginx’ package), you can find the Submit Package button to start this process:

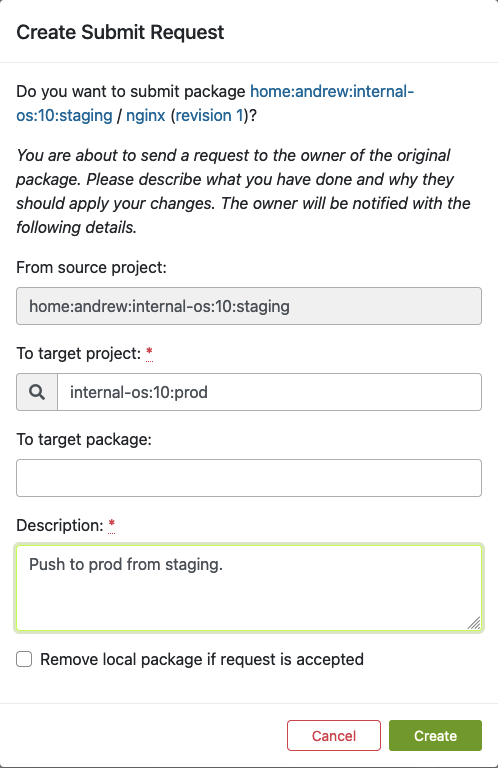

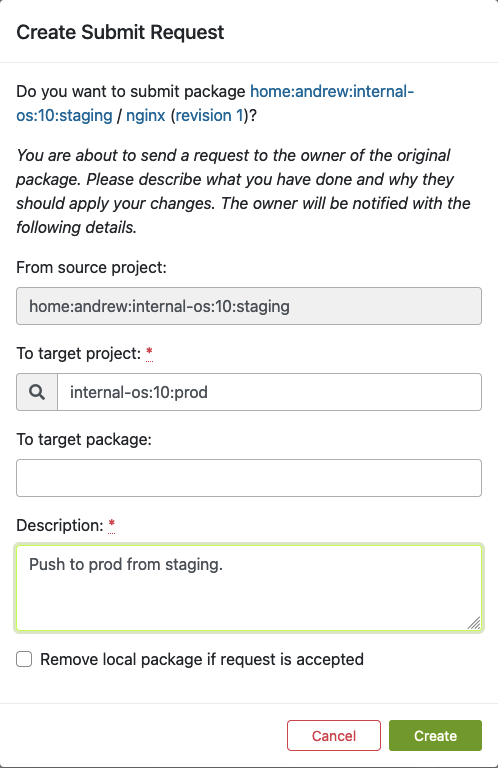

In our example, we use the ‘internal-os:10:prod’ project for the Production repository. Previously, we built and validated the ‘nginx’ package in the Staging repository. Now we want to submit this package into the Production project which we call ‘internal-os:10:prod’.

Once you click “Submit package”, the following dialog should pop up. Here, you can fill out the form to submit the newly built package to production:

Fill out this form and click ‘Create’ as soon as you are ready to submit the new package to the production project.
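Behind the dialog, a submit request is essentially a small XML document naming a source and a target. The Python sketch below builds such a document for the example projects used in this article. The element names follow the OBS request format as we understand it, the function name `submit_request_xml` is hypothetical, and the sketch deliberately stops short of sending anything to an OBS API.

```python
import xml.etree.ElementTree as ET


def submit_request_xml(src_project, package, tgt_project, description):
    """Build the XML body of a submit request from staging to prod."""
    request = ET.Element("request")
    action = ET.SubElement(request, "action", type="submit")
    ET.SubElement(action, "source", project=src_project, package=package)
    ET.SubElement(action, "target", project=tgt_project, package=package)
    ET.SubElement(request, "description").text = description
    return ET.tostring(request, encoding="unicode")


if __name__ == "__main__":
    print(
        submit_request_xml(
            "home:andrew:internal-os:10:staging",
            "nginx",
            "internal-os:10:prod",
            "nginx passed validation in staging",
        )
    )
```

A script like this can be useful when validated packages should be submitted in bulk instead of one dialog at a time.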

Find Requests

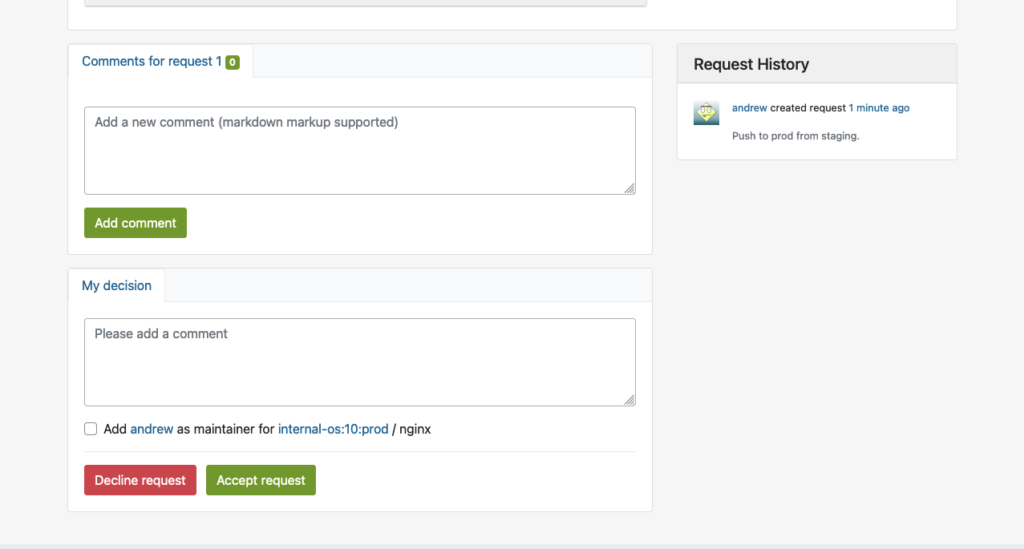

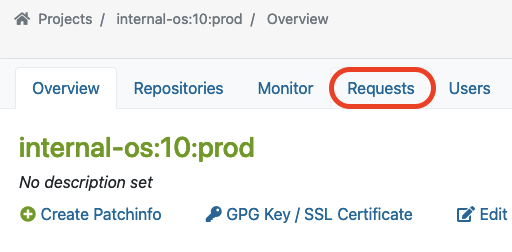

After a package has been submitted, a so-called repository master must approve the newly created request for the designated project. This master can find a list of all requests in the Requests tab and perform the Request Review Control there.

The master can find ‘Requests’ in the project overview page like this:

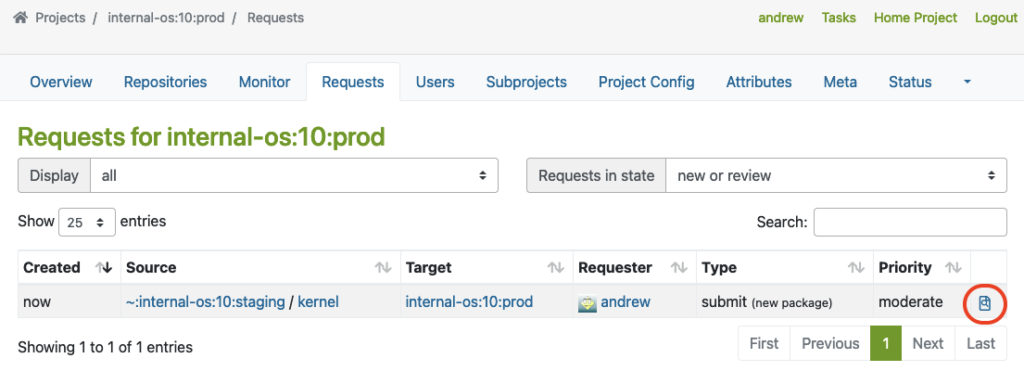

Here, you can see an example of a package that was submitted from Staging to the Prod project.

The master must click on the link marked with a red circle to perform the Request Review Control:

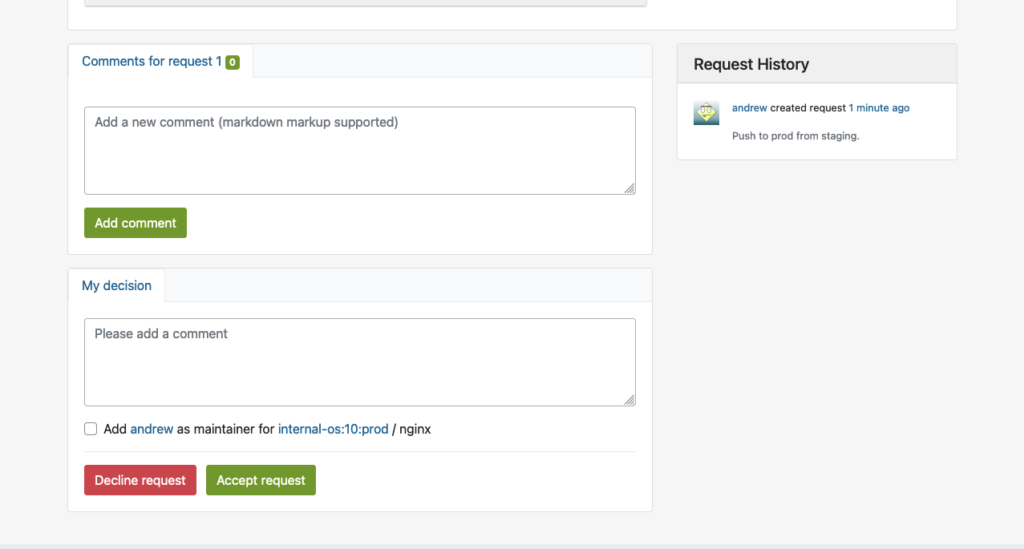

This will bring up the interface for Request Review Control, in which the repository master from the production project may decline or accept the package request:

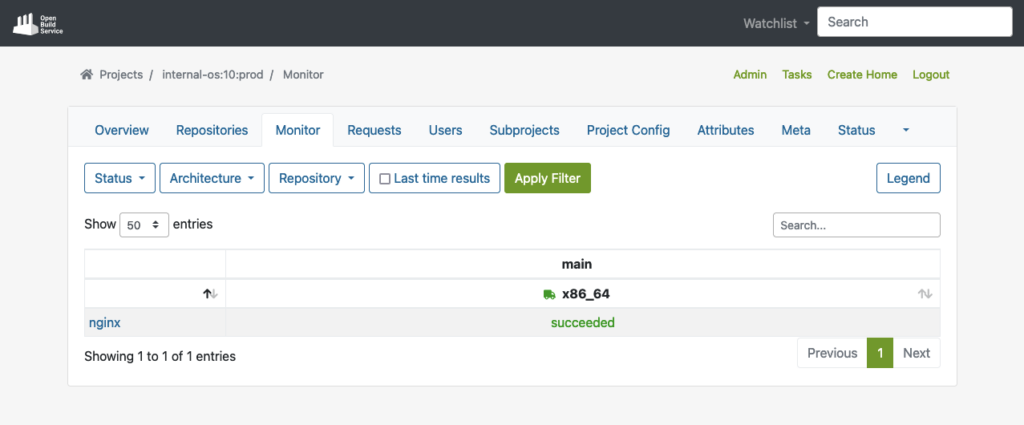

Reproduce in Prod Project

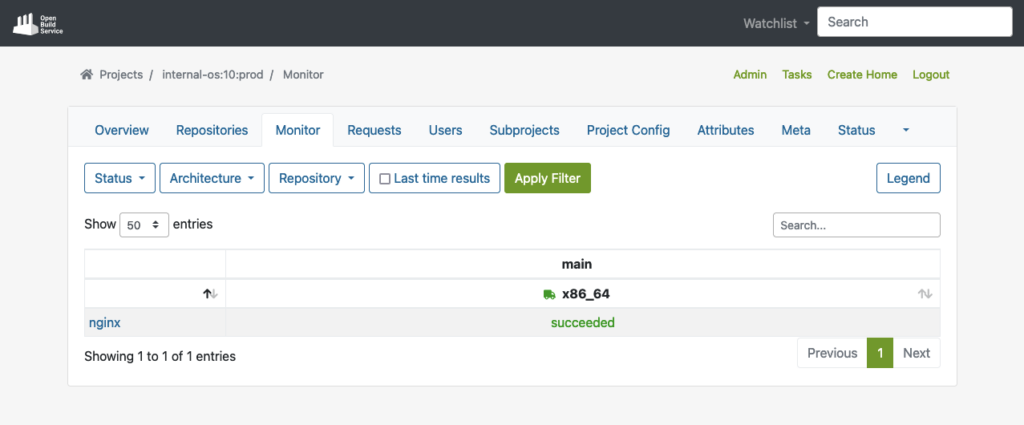

Once the package is accepted into the production project, it will automatically download all required dependencies from DoD, and reproduce the build process.

The example below shows the successfully built package in the production repo after the build process has been completed:

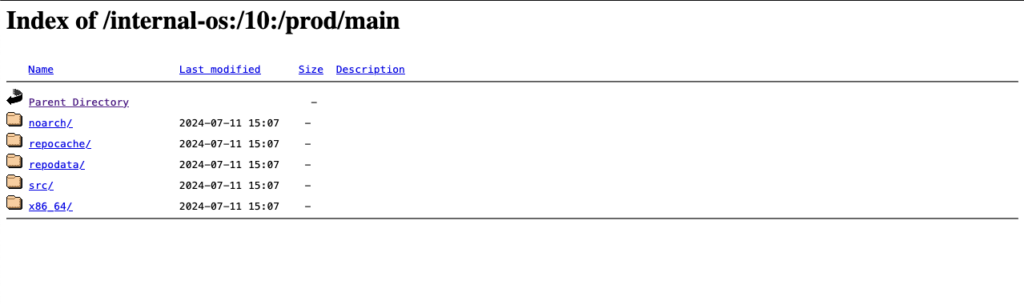

Publish to YUM-ready repository for Prod

Finally, the package will then also be published to the YUM-ready Prod repository as soon as the package has been built successfully. Here, you can see an example of the YUM-ready Prod repository that OBS handles.

(Note: you can name the project and the repository according to your preferences):

Extras

In this chapter, we want to have a look at some possible extra customizations for OBS in your build infrastructures.

Automated Build Pipelines

OBS supports automated builds that may seamlessly integrate into delivery pipelines. Teams can set up CI triggers to commit new packages into OBS to initiate builds automatically based on predefined criteria, reducing manual effort and ensuring timely delivery of updated packages.

Packages get automated builds when committed into an OBS project, which enables administrators to easily integrate automated builds via pipelines:

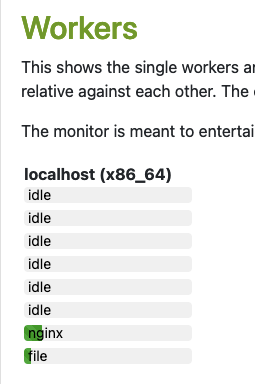
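One possible way to wire a CI job to OBS is a token-based trigger. The Python sketch below only prepares such an HTTP request and does not send it. It assumes that your OBS instance exposes a `/trigger/runservice` endpoint that accepts an `Authorization: Token ...` header, as described in the OBS documentation on authorization tokens; please verify this for your version. The API URL, the token value, and the function name are hypothetical.

```python
import urllib.request


def build_trigger_request(api_url: str, token: str,
                          operation: str = "runservice") -> urllib.request.Request:
    """Prepare (but do not send) a token-authenticated trigger request."""
    return urllib.request.Request(
        url=api_url.rstrip("/") + "/trigger/" + operation,
        method="POST",
        headers={"Authorization": f"Token {token}"},
    )


if __name__ == "__main__":
    # Hypothetical API host; a real token would come from the CI secret store.
    request = build_trigger_request("https://obs.internal.example", "SECRET")
    print(request.get_method(), request.full_url)
    # Sending it would be: urllib.request.urlopen(request)
```

Keeping the request construction separate from sending it makes the CI step easy to test without touching the build server.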

OBS Worker

Further, the Open Build Service is capable of configuring multiple workers (builders) in multiple architectures. If you want to build for other architectures, just install the ‘obs-worker’ package on other hardware with a different architecture and then edit the /etc/sysconfig/obs-server file.

In that file, you must set at least the following parameters on the worker node:

- OBS_SRC_SERVER

- OBS_REPO_SERVERS

- OBS_WORKER_INSTANCES

This method may also be useful if you want to have more workers of the same architecture available in OBS. openSUSE’s public OBS instance has a large-scale setup, which is a good example of this.
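Before starting a new worker, it can be handy to check that the three parameters listed above are actually set. The Python sketch below parses simple `KEY="value"` lines and reports missing settings. The host name, the port numbers, and the instance count in the example are hypothetical, and the parser intentionally ignores shell features such as variable expansion.

```python
REQUIRED_KEYS = ("OBS_SRC_SERVER", "OBS_REPO_SERVERS", "OBS_WORKER_INSTANCES")


def parse_sysconfig(text: str) -> dict:
    """Parse simple KEY="value" lines, ignoring comments and blank lines."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip().strip('"').strip("'")
    return settings


def missing_worker_settings(text: str) -> list:
    """Return the required worker keys that are absent or empty."""
    settings = parse_sysconfig(text)
    return [key for key in REQUIRED_KEYS if not settings.get(key)]


if __name__ == "__main__":
    # Hypothetical worker configuration with one setting left empty.
    example = """
    # worker node settings
    OBS_SRC_SERVER="obs.internal.example:5352"
    OBS_REPO_SERVERS="obs.internal.example:5252"
    OBS_WORKER_INSTANCES=""
    """
    print(missing_worker_settings(example))
```

On a real worker node, the text would be read from /etc/sysconfig/obs-server instead of an inline string.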

Emulated Build

In Open Build Service, it is also possible to configure emulated builds for cross-architecture build capabilities via QEMU. By using this feature, almost no changes are needed in package sources. Developers can build for target architectures without the need for hardware of those architectures to be available. However, you might encounter problems with bugs or missing support in QEMU, and emulation may slow down the build process significantly. For instance, see how openSUSE managed this on RISC-V when they didn’t have the real hardware in the initial porting stage.

Conclusion

Integrating a private OBS instance as the build infrastructure provides teams with a robust and comprehensive solution for building security-patched packages for extended support of end-of-life (EOL) Linux distributions. By doing so, teams can unify the package building process, reduce inconsistencies, and achieve reproducibility. This approach simplifies the development and testing workflow while enhancing the overall quality and reliability of the generated packages. Additionally, by replicating the semi-original build environment, the resulting packages are built at a similar level of quality and compatibility to upstream builds.

If you are interested in integrating a private build infrastructure, or require extended support for any EOL Linux distribution, please reach out to us. Our team has the expertise to assist you in integrating a private OBS instance into your existing infrastructure.

This article was originally written by Andrew Lee.

DebConf 2024 from July 28 to August 4, 2024: https://debconf24.debconf.org/

Last week the annual Debian Community Conference DebConf happened in Busan, South Korea. Four NetApp employees (Michael, Andrew, Christoph and Noël) participated the whole week at the Pukyong National University. The camp takes place before the conference, where the infrastructure is set up and the first collaborations take place. The camp is described in a separate article: https://www.credativ.de/en/blog/credativ-inside/debcamp-bootstrap-for-debconf24/

There was a heat wave with high humidity in Korea at the time, but the venue and accommodation at the University are air-conditioned, so collaboration work, talks and BoFs were possible under the circumstances.

Around 400 Debian enthusiasts from all over the world were onsite, and additional people attended remotely via the video streaming and the Matrix online chat #debconf:matrix.debian.social

The content team created a schedule covering different aspects of Debian: technical, social, political, and more.

https://debconf24.debconf.org/schedule/

There were two bigger announcements during DebConf24:

- the new distribution eLxr https://elxr.org/, based on Debian and initiated by Wind River

https://debconf24.debconf.org/talks/138-a-unified-approach-for-intelligent-deployments-at-the-edge/

Two takeaway points I understood from this talk are that Wind River wants to replace CentOS and prefers a binary distribution.

- The Debian package management system will get a new solver https://debconf24.debconf.org/talks/8-the-new-apt-solver/

The list of interesting talks from a full conference week is much longer. Most talks and BoFs were streamed live, and the recordings can be found in the video archive:

https://meetings-archive.debian.net/pub/debian-meetings/2024/DebConf24/

It is a tradition to have a day trip for socializing and for getting a more interesting view of the city and the country: https://wiki.debian.org/DebConf/24/DayTrip/ (sorry, the details of the three day trips are on the website for participants).

For the annual conference group photo we had to go outside into the heat and high humidity, but I hope you cannot see us sweating.

The Debian Conference 2025 will take place in July in Brest, France (https://wiki.debian.org/DebConf/25/), and we will be there. :) Maybe it will be a chance for you to join us.

See also Debian News: DebConf24 closes in Busan and DebConf25 dates announced

DebConf24 (https://debconf24.debconf.org/) took place from 2024-07-28 to 2024-08-04 in Busan, Korea.

Four employees (three Debian developers) from NetApp had the opportunity to participate in the annual event, which is the most important conference in the Debian world: Christoph Senkel, Andrew Lee, Michael Meskes and Noël Köthe.

DebCamp

What is DebCamp? DebCamp usually takes place in the week before DebConf begins. For participants, it is a hacking session: a week dedicated to Debian contributors focusing on their Debian-related projects, tasks, or problems without interruptions.

DebCamps are largely self-organized since it’s a time for people to work. Some prefer to work individually, while others participate in or organize sprints. Both approaches are encouraged, although it’s recommended to plan your DebCamp week in advance.

During this DebCamp, there are the following public sprints:

- Python Team Sprint: QA work on the Python Team’s packages

- l10n-pt-br Team Sprint: pt-br translation

- Security Tools Packaging Team Sprint: QA work on the pkg-security Team’s packages

- Ruby Team Sprint: Work on the transition to Ruby 3.3

- Go Team Sprint: Get newer versions of docker.io, containerd, and podman into unstable/testing

- Ftpmaster Team Sprint: discuss potential changes in ftpmaster team, workflow and communication

- DebConf24 Boot Camp: guide people new to Debian with a focus on Debian packaging

- LXQt Team Sprint: Workshop for newcomers and work on the latest upstream release based on Qt6 and Wayland support.

Scheduled workshops include:

GPG Workshop for Newcomers:

Asymmetric cryptography is a daily tool in Debian operations, used to establish trust and secure communications through email encryption, package signing, and more. In this workshop, participants will learn to create a PGP key and perform essential tasks such as file encryption/decryption, content signing, and sending encrypted emails. After creation, the key will be uploaded to public keyservers, enabling attendees to participate in our Continuous Keysigning Party.

Creating Web Galleries with Geo-Tagged Photos:

Learn how to create a web gallery with integrated maps from a geo-tagged photo collection. The session will cover the use of fgallery, openlayers, and a custom Python script, all orchestrated by a Makefile. This method, used for a South Korea gallery in 2018, will be taught hands-on, empowering others to showcase their photo collections similarly.

Introduction to Creating .deb Files (Debian Packaging):

This session will delve into the basics of Debian packaging and the Debian release cycle, including stable, unstable, and testing branches. Attendees will set up a Debian unstable system, build existing packages from source, and learn to create a Debian package from scratch. Discussions will extend online at #debconf24-bootcamp on irc.oftc.net.

Our colleague Andrew is part of the orga team this year. He helped arrange the Cheese and Wine party and proposed a “Coffee Lab” where people can bring coffee equipment and beans from their countries and share them with each other during the conference. Andrew successfully set up the Coffee Lab in the social space with support from the “Local Team” and contributors Kitt, Clement, and Steven. They provided a diverse selection of beans and teas from countries such as Colombia, Ethiopia, India, Peru, Taiwan, Thailand, and Guatemala. Additionally, they shared various coffee-making tools, including the “Mr. Clever Dripper,” AeroPress, and AerSpeed grinder.

DebCamp also allows the DebConf committee to work together with the local team to prepare additional details for the conference. During DebCamp, the organization team typically handles the following tasks:

Setting up the Frontdesk: This involves providing conference badges (with maps and additional information) and distributing SWAG such as food vouchers, conference t-shirts, conference cups, USB-powered fans, and sponsor gifts.

Setting up the network: This includes configuring the network in conference rooms, hack labs, and video team equipment for live streaming during the event.

Accommodation arrangements: Assigning rooms for participants to check in to on-site accommodations.

Food arrangements: Catering to various dietary requirements, including regular, vegetarian, vegan, and accommodating special religious and allergy-related needs.

Setting up a social space: Providing a relaxed environment for participants to socialize and get to know each other.

Writing daily announcements: Keeping participants informed about ongoing activities.

Arranging childcare service.

Organizing day trip options.

Arranging parties.

In addition to the organizational part, our colleague Andrew also arranged and attended private sprints during DebCamp, which continued through DebConf with his LXQt team BoF and a private LXQt team workshop for newcomers, where the team received contributions from newcomers. The youngest was only 13 years old; he created his first GPG key during the GPG workshop and attended the LXQt team workshop, where he managed to fix a few bugs in Debian during the session.

Young kids in DebCamp

At DebCamp, two young attendees, aged 13 and 10, participated in a GPG workshop for newcomers and created their own GPG keys. The older child hastily signed another new attendee’s key without proper verification, not fully grasping that Debian’s security relies on the trustworthiness of GPG keys. This prompted a lesson from his Debian Developer father, who explained the importance of trust by comparing it to entrusting someone with the keys to one’s home. Realizing his mistake, the child considered how to rectify the situation since he had already signed and uploaded the key. He concluded that he could revoke the old key and create a new one after DebConf, which he did, securing his new GPG and SSH keys with a Yubikey.

This article was initially written by Andrew Lee.

In the world of virtualization, ensuring data redundancy and high availability is crucial. Proxmox Virtual Environment (PVE) is a powerful open-source platform for enterprise virtualization, combining KVM hypervisor and LXC containers. One of the key features that Proxmox offers is local storage replication, which helps in maintaining data integrity and availability in case of hardware failures. In this blog post, we will delve into the concept of local storage replication in Proxmox, its benefits, and how to set it up.

What is Local Storage Replication?

Local storage replication in Proxmox refers to the process of duplicating data from one local storage device to another within the same Proxmox cluster. This ensures that if one storage device fails, the data is still available on another device, thereby minimizing downtime and data loss. This is particularly useful in environments where high availability is critical.

Benefits

- Data Redundancy: By replicating data across multiple storage devices, you ensure that a copy of your data is always available, even if one device fails.

- High Availability: In the event of hardware failure, the system can quickly switch to the replicated data, ensuring minimal disruption to services.

Caveat

Please note that data written between the last synchronization and the failure of the node may be lost. If you cannot tolerate even a small data loss, use shared storage (Ceph, NFS, …) in the cluster instead.

Setting Up Local Storage Replication in Proxmox

Setting up local storage replication in Proxmox involves a few steps. Here’s a step-by-step guide to help you get started:

Step 1: Prepare Your Environment

Ensure that you have a Proxmox cluster set up with at least two nodes. Each node should have local ZFS storage configured.

Step 2: Configure Storage Replication

- Access the Proxmox Web Interface: Log in to the Proxmox web interface.

- Navigate to Datacenter: In the left-hand menu, click on Datacenter.

- Select Storage: Under the Datacenter menu, click on Storage.

- Add Storage: Click on Add and select the type of storage you want to replicate.

- Configure Storage: Fill in the required details for the ZFS storage (one local storage per node).

Step 3: Set Up Replication

- Navigate to the Node: In the left-hand menu, select the node where you want to set up replication.

- Select the VM/CT: Click on the virtual machine (VM) or container (CT) you want to replicate.

- Configure Replication: Go to the Replication tab and click on Add.

- Select Target Node: Choose the target node where the data will be replicated to.

- Schedule Replication: Set the replication schedule according to your needs (e.g. every 5 minutes, hourly).
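The same kind of job can also be created on the command line with pvesr, the Proxmox storage replication tool. The following Python sketch only assembles such a command and does not execute it. The guest ID 100, the job number 0, the node name pve2, and the helper name are illustrative assumptions, not values taken from this article.

```python
import shlex


def replication_job_command(vmid: int, job_number: int,
                            target_node: str, schedule: str) -> list[str]:
    """Build a 'pvesr create-local-job' command line (hypothetical helper).

    The job ID combines the guest ID and a job number, e.g. 100-0.
    """
    job_id = f"{vmid}-{job_number}"
    return ["pvesr", "create-local-job", job_id, target_node,
            "--schedule", schedule]


# Replicate guest 100 to node pve2 every 5 minutes (illustrative values).
command = replication_job_command(100, 0, "pve2", "*/5")
print(shlex.join(command))
```

Keeping the command as a list of arguments avoids shell quoting problems with schedule strings such as */5 if it is later handed to subprocess.run on a cluster node.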

Step 4: Monitor Replication

Once replication is set up, you can monitor its status in the Replication tab. Proxmox provides detailed logs and status updates to help you ensure that replication is functioning correctly.

Best Practices for Local Storage Replication

- Regular Backups: While replication provides redundancy, it is not a substitute for regular backups. Ensure that you have a robust backup strategy in place. Use tools like the Proxmox Backup Server (PBS) for this task.

- Monitor Storage Health: Regularly check the health of your storage devices to preemptively address any issues.

- Test Failover: Periodically test the failover process to ensure that your replication setup works as expected in case of an actual failure.

- Optimize Replication Schedule: Balance the replication frequency with your performance requirements and network bandwidth to avoid unnecessary load.

Conclusion

Local storage replication in Proxmox is a powerful feature that enhances data redundancy and high availability. By following the steps outlined in this blog post, you can set up and manage local storage replication in your Proxmox environment, ensuring that your data remains safe and accessible even in the face of hardware failures. Remember to follow best practices and regularly monitor your replication setup to maintain optimal performance and reliability.

You can find further information about Proxmox storage replication here:

https://pve.proxmox.com/wiki/Storage_Replication

https://pve.proxmox.com/pve-docs/chapter-pvesr.html

Happy virtualizing!

Fundamentally, access control under Linux is a simple matter:

Files specify their access rights (execute, write, read) separately for their owner, their group, and finally, other users. Every process (whether a user’s shell or a system service) running on the system operates under a user ID and group ID, which are used for access control.

A web server running with the permissions of user www-data and group www-data can thus be granted access to its configuration file in the directory /etc, its log file under /var/log, and the files to be delivered under /var/www. The web server should not require more access rights for its operation.

Nevertheless, whether due to misconfiguration or a security vulnerability, it could also access and deliver files belonging to other users and groups, as long as these are readable by everyone, as is technically the case, for example, with /etc/passwd. Unfortunately, this cannot be prevented with traditional Discretionary Access Control (DAC), as used in Linux and other Unix-like systems.
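The owner/group/other decision described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions: it looks only at the read bits, ignores root, capabilities, and ACLs, and the numeric IDs (33 for www-data, 42 for a shadow group) as well as the function name are illustrative.

```python
import stat


def dac_allows_read(mode: int, file_uid: int, file_gid: int,
                    proc_uid: int, proc_gids: set[int]) -> bool:
    """Simplified DAC read check: exactly one of owner, group, other applies."""
    if proc_uid == file_uid:
        return bool(mode & stat.S_IRUSR)   # owner bits decide
    if file_gid in proc_gids:
        return bool(mode & stat.S_IRGRP)   # group bits decide
    return bool(mode & stat.S_IROTH)       # everyone else


# A world-readable file such as /etc/passwd (root:root, mode 0644) can be
# read by a process running as www-data, although it is not its owner.
print(dac_allows_read(0o644, 0, 0, proc_uid=33, proc_gids={33}))

# A file with mode 0640 owned by root and another group cannot.
print(dac_allows_read(0o640, 0, 42, proc_uid=33, proc_gids={33}))
```

The first call shows the gap that DAC leaves open: nothing in the file's permission bits expresses that the web server has no business reading that file.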

However, since December 2003, the Linux kernel has offered a framework with the Linux Security Modules (LSM), which allows for the implementation of Mandatory Access Control (MAC), where rules can precisely specify which resources a process may access. AppArmor implements such a MAC and has been included in the Linux kernel since 2010. While it was originally only used in SuSE and later Ubuntu, it has also been enabled by default in Debian since Buster (2019).

AppArmor

AppArmor checks and monitors, based on a profile, which permissions a program or script has on a system. A profile typically contains the rule set for a single program. For example, it defines how (read, write) files and directories may be accessed, whether a network socket may be created, or whether and to what extent other applications may be executed. All other actions not defined in the profile are denied.

Profile Structure

The following listing (line numbers are not part of the file and serve only for orientation) shows the profile for a simple web server whose program file is located under /usr/sbin/httpd.

By default, AppArmor profiles are located in the directory /etc/apparmor.d and are conventionally named after the path of the program file. The first slash is omitted, and all subsequent slashes are replaced by dots. The web server’s profile is therefore located in the file /etc/apparmor.d/usr.sbin.httpd.
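Before turning to the profile itself, the naming convention just described can be expressed as a small Python sketch. The function name is hypothetical, and the rule is only a convention, so existing profiles may be named differently.

```python
def profile_path(executable: str, profile_dir: str = "/etc/apparmor.d") -> str:
    """Derive the conventional profile file name for an executable path.

    The leading slash is dropped and every remaining slash becomes a dot.
    """
    name = executable.lstrip("/").replace("/", ".")
    return f"{profile_dir}/{name}"


print(profile_path("/usr/sbin/httpd"))
```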

     1  include <tunables/global>
     2
     3  @{WEBROOT}=/var/www/html
     4
     5  profile httpd /usr/sbin/httpd {
     6    include <abstractions/base>
     7    include <abstractions/nameservice>
     8
     9    capability dac_override,
    10    capability dac_read_search,
    11    capability setgid,
    12    capability setuid,
    13
    14    /usr/sbin/httpd mr,
    15
    16    /etc/httpd/httpd.conf r,
    17    /run/httpd.pid rw,
    18
    19    @{WEBROOT}/** r,
    20
    21    /var/log/httpd/*.log w,
    22  }

Preamble

The instruction include in line 1 inserts the content of other files in place, similar to the C preprocessor directive of the same name. If the filename is enclosed in angle brackets, as here, the specified path refers to the folder /etc/apparmor.d; with quotation marks, the path is relative to the profile file.

Occasionally, though now outdated, the notation #include can still be found. However, since comments in AppArmor profiles begin with a # and the rest of the line is ignored, the old notation leads to an ambiguity: a supposedly commented-out #include instruction would in fact be executed! Therefore, to comment out an include instruction, a space after the # is recommended.

The files in the subfolder tunables typically contain variable and alias definitions that are used by multiple profiles and are defined in only one place, according to the Don’t Repeat Yourself principle (DRY).

In line 3, the variable WEBROOT is defined with @{WEBROOT} and assigned the value /var/www/html. If other profiles, in addition to the current one, were to define rules for the webroot directory, the variable could instead be defined in its own file under tunables and included in the respective profiles.

Profile Section

The profile section begins in line 5 with the keyword profile. It is followed by the profile name, here httpd, the path to the executable file, /usr/sbin/httpd, and optionally flags that influence the profile’s behavior. The individual rules of the profile then follow, enclosed in curly braces.

As before, in lines 6 and 7, include also inserts the content of the specified file in place. In the subfolder abstractions, according to the DRY principle, there are files with rule sets that appear repeatedly in the same form, as they cover both fundamental and specific functionalities.

For example, in the file base, access to various file systems such as /dev, /proc, and /sys, as well as to runtime libraries or some system-wide configuration files, is regulated. The file

Starting with line 9, rules with the keyword capability grant a program special privileges, known as capabilities. Of these, setuid and setgid are probably the best known: they allow the program to change its own uid and gid; for example, a web server can start as root, open the privileged port 80, and then drop its root privileges. dac_override and dac_read_search allow bypassing the checks of read, write, and execute permissions. Without these capabilities, even a program running under uid root would not be able to access files regardless of their attributes, unlike what one is used to from the shell.

From line 14 onwards, there are rules that determine access permissions for paths (i.e., folders and files). The structure is quite simple: first, the path is specified, followed by a space and the abbreviations for the granted permissions.

Aside: Permissions

The following table provides a brief overview of the most common permissions:

| Abbreviation | Meaning | Description |
|---|---|---|
| r | read | read access |
| w | write | write access |
| a | append | appending data |
| x | execute | execute |
| m | memory map executable | mapping and executing the file’s content in memory |
| k | lock | setting a lock |
| l | link | creating a link |

Aside: Globbing

Paths can either be fully written out individually or multiple paths can be combined into one path using wildcards. This process, called globbing, is also used by most shells today, so this notation should not cause any difficulties.

| Expression | Description |
|---|---|
| /dir/file | refers to exactly one file |
| /dir/* | includes all files within /dir/ |
| /dir/** | includes all files within /dir/, including subdirectories |
| ? | represents exactly one character |
| {} | Curly braces allow for alternations |
| [] | Square brackets can be used for character classes |

Examples:

| Expression | Description |
|---|---|
| /dir/??? | refers to all files in /dir whose filename is exactly 3 characters long |
| /dir/*.{png,jpg} | refers to all image files in /dir whose file extension is png or jpg |
| /dir/[abc]* | refers to all files in /dir whose name begins with the letters a, b, or c |
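To get a feeling for these expressions, the following Python sketch translates such a glob into a regular expression and tests a few paths against it. It is a simplified model written for this article, not the matcher AppArmor actually uses: it assumes that * and ? do not cross a slash while ** does, and it ignores escaping and other special cases. The function names are hypothetical.

```python
import re


def glob_to_regex(pattern: str) -> re.Pattern:
    """Translate a simplified AppArmor-style glob into a regular expression."""
    parts = []
    depth = 0  # nesting level of {...} alternations
    i = 0
    while i < len(pattern):
        char = pattern[i]
        if pattern.startswith("**", i):
            parts.append(".*")        # any characters, including '/'
            i += 2
            continue
        if char == "*":
            parts.append("[^/]*")     # any characters except '/'
        elif char == "?":
            parts.append("[^/]")      # exactly one character except '/'
        elif char == "{":
            parts.append("(?:")
            depth += 1
        elif char == "}" and depth:
            parts.append(")")
            depth -= 1
        elif char == "," and depth:
            parts.append("|")
        elif char == "[" and "]" in pattern[i + 1:]:
            end = pattern.index("]", i + 1)
            parts.append(pattern[i:end + 1])  # character class, kept as is
            i = end + 1
            continue
        else:
            parts.append(re.escape(char))
        i += 1
    return re.compile("".join(parts))


def matches(pattern: str, path: str) -> bool:
    """Return True if the whole path is covered by the glob."""
    return glob_to_regex(pattern).fullmatch(path) is not None


print(matches("/dir/*", "/dir/sub/file"))        # '*' stops at the slash
print(matches("/dir/**", "/dir/sub/file"))       # '**' also covers subdirectories
print(matches("/dir/*.{png,jpg}", "/dir/a.png"))
```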

For access to the program file /usr/sbin/httpd, the web server receives the permissions mr in line 14. The abbreviation r stands for read and means that the content of the file may be read; m stands for memory map executable and allows the content of the file to be loaded into memory and executed.

Anyone who dares to look into the file /etc/apparmor.d/abstractions/base will see that the permission m is also necessary for loading libraries, among other things.

During startup, the web server will attempt to read its configuration from the file /etc/httpd/httpd.conf. Since the path has r permission for reading, AppArmor will allow this. Subsequently, httpd writes its PID to the file /run/httpd.pid. The abbreviation w naturally stands for write and allows write operations on the path. (Lines 16, 17)

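The web server is intended to deliver files below the WEBROOT directory. To avoid having to list all files and subdirectories individually, the wildcard ** can be used. The expression @{WEBROOT}/** in line 19 covers all files within and below the folder, and r grants read access to them.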

As usual, all access to the web server is logged in the log files access.log and error.log in the directory /var/log/httpd/. These are only written by the web server, so it is sufficient to set only write permission for the path /var/log/httpd/*.log with w in line 21.

With this, the profile is complete and ready for use. In addition to those shown here, there are a variety of other rule types with which the allowed behavior of a process can be precisely defined.

Further information on profile structure can be found in the man page for apparmor.d and in the Wiki article on the AppArmor Quick Profile Language; a detailed description of all rules can be found in the AppArmor Core Policy Reference.

Creating a Profile

Some applications and packages already come with ready-made AppArmor profiles, while others still need to be adapted to specific circumstances. Still other packages do not come with any profiles at all – these must be created by the administrator themselves.

To create a new AppArmor profile for an application, a very basic profile is usually created first, and AppArmor is instructed to treat it in the so-called complain mode. Here, accesses that are not yet defined in the profile are recorded in the system’s log files.

Based on these log entries, the profile can then be refined after some time, and if no more entries appear in the logs, AppArmor can be instructed to switch the profile to enforce mode, enforce the rules listed therein, and block undefined accesses.

Even though it is easily possible to create and adapt an AppArmor profile manually in a text editor, the package apparmor-utils contains various helper programs that can facilitate the work: for example, aa-genprof helps create a new profile, aa-complain switches it to complain mode, aa-logprof helps search log files and add corresponding new rules to the profile, and aa-enforce finally switches the profile to enforce mode.
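The order in which these helpers are typically used can be summarized in a short Python sketch. It only lists the commands and executes nothing; the function name is hypothetical, and /usr/sbin/httpd is the example program from the profile above.

```python
def profiling_workflow(program: str) -> list[list[str]]:
    """Return the apparmor-utils commands in the order described above."""
    return [
        ["aa-genprof", program],   # create an initial profile
        ["aa-complain", program],  # complain mode: log instead of block
        ["aa-logprof"],            # turn logged accesses into new rules
        ["aa-enforce", program],   # enforce mode: block undefined accesses
    ]


for command in profiling_workflow("/usr/sbin/httpd"):
    print(" ".join(command))
```

In practice, the aa-logprof step is repeated until the logs stay quiet, and only then is the profile switched to enforce mode.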

In the next article of this series, we will create our own profile for the web server nginx based on the foundations established here.

We are Happy to Support You

Whether AppArmor, Debian, or PostgreSQL: with more than 22 years of development and service experience in the open source sector, credativ GmbH can professionally support you with unparalleled and individually configurable support, assisting you with all questions regarding your open source infrastructure.

Do you have questions about our article or would you like credativ’s specialists to take a look at other software of your choice? Then feel free to visit us and contact us via our contact form or send us an email at info@credativ.de.

About Credativ

credativ GmbH is a vendor-independent consulting and service company based in Mönchengladbach. Since the successful merger with Instaclustr in 2021, credativ GmbH has been the European headquarters of the Instaclustr Group.

The Instaclustr Group helps companies realize their own large-scale applications thanks to managed platform solutions for open source technologies such as Apache Cassandra®, Apache Kafka®, Apache Spark™, Redis™, OpenSearch™, Apache ZooKeeper™, PostgreSQL®, and Cadence. Instaclustr combines a complete data infrastructure environment with practical expertise, support, and consulting to ensure continuous performance and optimization. By eliminating infrastructure complexity, companies are enabled to focus their internal development and operational resources on building innovative, customer-centric applications at lower costs. Instaclustr’s clients include some of the largest and most innovative Fortune 500 companies.

pg_dirtyread

I recently updated pg_dirtyread to work with PostgreSQL 14. pg_dirtyread is a PostgreSQL extension that allows reading “dead” rows from tables, i.e., rows that have already been deleted or updated. Of course, this only works if the table has not yet been cleaned by a VACUUM command or autovacuum, PostgreSQL’s garbage collection.

Here is an example of pg_dirtyread in action:

    # create table foo (id int, t text);
    CREATE TABLE
    # insert into foo values (1, 'Doc1');
    INSERT 0 1
    # insert into foo values (2, 'Doc2');
    INSERT 0 1
    # insert into foo values (3, 'Doc3');
    INSERT 0 1
    # select * from foo;
     id │  t
    ────┼──────
      1 │ Doc1
      2 │ Doc2
      3 │ Doc3
    (3 rows)

    # delete from foo where id < 3;
    DELETE 2
    # select * from foo;
     id │  t
    ────┼──────
      3 │ Doc3
    (1 row)

Oops! The first two documents are gone.

Let’s now use pg_dirtyread to view the table:

    # create extension pg_dirtyread;
    CREATE EXTENSION
    # select * from pg_dirtyread('foo') t(id int, t text);
     id │  t
    ────┼──────
      1 │ Doc1
      2 │ Doc2
      3 │ Doc3

All three documents are still present, but only one of them is visible.

pg_dirtyread can also display the system columns of PostgreSQL with information about the position and visibility of the rows. For the first two documents, xmax is set, which means that the row has been deleted:

    # select * from pg_dirtyread('foo') t(ctid tid, xmin xid, xmax xid, id int, t text);
     ctid  │ xmin │ xmax │ id │  t
    ───────┼──────┼──────┼────┼──────
     (0,1) │ 1577 │ 1580 │  1 │ Doc1
     (0,2) │ 1578 │ 1580 │  2 │ Doc2
     (0,3) │ 1579 │    0 │  3 │ Doc3
    (3 rows)

Undelete

Caveat: I cannot promise that any of the ideas listed below will work in practice. There are some uncertainties and a good deal of complicated knowledge about PostgreSQL internals may be required to succeed. I would ask you to consider consulting your preferred PostgreSQL service provider for advice before attempting to restore data on a production system. Do not try this at work!

I always had plans to extend pg_dirtyread with an “Undelete” command to make deleted rows reappear, but unfortunately, I never got around to trying it out in the past. However, rows can already be restored by using the output of pg_dirtyread itself:

    # insert into foo select * from pg_dirtyread('foo') t(id int, t text) where id = 1;

However, this is not a real “Undelete” or “undo” – it only inserts new rows from the data read from the table.

pg_surgery

Let’s welcome pg_surgery, a new PostgreSQL extension that comes with PostgreSQL 14. It contains two functions for “surgery on a broken relation”. As a side effect, they can also make deleted tuples reappear.

As I have now discovered, one of the functions, heap_force_freeze(), works well with pg_dirtyread. It takes a list of ctids (row positions) that it marks as “frozen” but also as “not deleted”.
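What this means for row visibility can be illustrated with a toy model in Python. This is a deliberately rough sketch and not how PostgreSQL works internally: it treats every row with a non-zero xmax as deleted and ignores transaction status, hint bits, and snapshots. The class and function names are hypothetical; the xmin and xmax values are those from the output above, and the value 2 for a frozen xmin is an assumption matching the frozen row shown further below.

```python
from dataclasses import dataclass

FROZEN_XID = 2    # xmin of a frozen row (assumption, see lead-in)
INVALID_XID = 0   # xmax of a row that has not been deleted


@dataclass
class HeapTuple:
    ctid: tuple[int, int]
    xmin: int
    xmax: int
    doc_id: int
    text: str


def normal_read(heap: list[HeapTuple]) -> list[HeapTuple]:
    """Toy visibility rule: only rows without xmax are visible."""
    return [row for row in heap if row.xmax == INVALID_XID]


def dirty_read(heap: list[HeapTuple]) -> list[HeapTuple]:
    """Like pg_dirtyread in this model: return every row still stored."""
    return list(heap)


def force_freeze(heap: list[HeapTuple], ctids: set[tuple[int, int]]) -> None:
    """Mark the given rows as frozen and, as a side effect, as not deleted."""
    for row in heap:
        if row.ctid in ctids:
            row.xmin = FROZEN_XID
            row.xmax = INVALID_XID


heap = [
    HeapTuple((0, 1), 1577, 1580, 1, "Doc1"),
    HeapTuple((0, 2), 1578, 1580, 2, "Doc2"),
    HeapTuple((0, 3), 1579, 0, 3, "Doc3"),
]
print([row.doc_id for row in normal_read(heap)])   # only Doc3 is visible
force_freeze(heap, {(0, 1)})
print([row.doc_id for row in normal_read(heap)])   # Doc1 is visible again
```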

Let’s apply it to our test table using the ctids that pg_dirtyread can read:

    # create extension pg_surgery;
    CREATE EXTENSION
    # select heap_force_freeze('foo', array_agg(ctid))
      from pg_dirtyread('foo') t(ctid tid, xmin xid, xmax xid, id int, t text) where id = 1;
     heap_force_freeze
    ───────────────────

    (1 row)

And voilà, our deleted document is back:

    # select * from foo;
     id │  t
    ────┼──────
      1 │ Doc1
      3 │ Doc3
    (2 rows)

    # select * from pg_dirtyread('foo') t(ctid tid, xmin xid, xmax xid, id int, t text);
     ctid  │ xmin │ xmax │ id │  t
    ───────┼──────┼──────┼────┼──────
     (0,1) │    2 │    0 │  1 │ Doc1
     (0,2) │ 1578 │ 1580 │  2 │ Doc2
     (0,3) │ 1579 │    0 │  3 │ Doc3
    (3 rows)

Disclaimer

Most importantly, none of the above methods will work if the data you just deleted has already been cleaned up by VACUUM or autovacuum, which actively reclaims the storage space of deleted rows. In that case, restore your data from a backup.

Since both pg_dirtyread and pg_surgery operate outside of the normal PostgreSQL MVCC machinery, it is easy to create corrupt data with them. This includes duplicate rows, duplicate primary key values, indexes that are not synchronized with the tables, broken foreign key constraints, and others. You have been warned.

Furthermore, pg_dirtyread does not (yet) work if the deleted rows contain any toasted values. Possible other approaches are the use of pageinspect and pg_filedump to get the ctids of the deleted rows. Please make sure you have working backups and do not need any of the above methods.

We are Happy to Support You

The necessity of the above operations can be completely prevented in most cases by professional administration or setup of the PostgreSQL infrastructure by a competent service provider. If you are currently facing one of these problems and are looking for ways out of a predicament, please contact us by email at info@credativ.de or via the contact form.

With more than 22 years of development and service experience in the PostgreSQL and open source area, credativ GmbH can professionally accompany you with individually configurable support and assist you with all questions regarding your open source infrastructure.

This article was originally written by Christoph Berg