Proxmox installation is a crucial step for companies looking to implement a professional virtualization solution. This comprehensive guide will walk you through the entire Proxmox VE installation process and help you set up a stable hypervisor environment. You will need basic Linux knowledge and approximately 2–3 hours for the complete installation and basic configuration. For this Proxmox guide, you will need a dedicated server with at least 4 GB RAM, an 8 GB USB stick, and access to the target computer’s BIOS. After completing these steps, you will have a fully functional Proxmox VE system for your server virtualization.

Why Proxmox VE is the Optimal Virtualization Solution

Proxmox Virtual Environment (VE) offers companies a cost-effective alternative to proprietary hypervisor solutions. As an open-source platform, Proxmox VE eliminates the high licensing costs associated with commercial virtualization solutions.

The integrated backup functions enable automated backup strategies without additional software. Proxmox VE supports both KVM virtualization for complete operating systems and LXC containers for resource-efficient applications.

Enterprise features such as High Availability, Live Migration, and Clustering are available by default. The web-based management interface significantly simplifies administration and allows access from any workstation.

Proxmox VE stands out due to its flexibility and direct access to the source code. Typical application areas include development environments, test labs, and productive server infrastructures, especially in medium-sized companies.

Check System Requirements and Hardware Preparation

Before installing Proxmox, verify your system’s hardware compatibility. Minimum requirements include a 64-bit processor with virtualization support (Intel VT-x or AMD-V).

Minimum Hardware Requirements

- CPU: 64-bit processor with virtualization capabilities

- RAM: 4 GB (recommended: 8 GB or more)

- Storage: 32 GB available hard disk space

- Network: Gigabit Ethernet adapter

Recommended Configuration for a Small Production Server

- CPU: Multi-core processor with at least 4 cores

- RAM: 32 GB or more for production environments

- Storage: SSD or NVMe with at least 500 GB for better performance

- Network: Redundant network connections

Enable virtualization functions in the BIOS. Look for settings like “Intel VT-x”, “AMD-V”, or “Virtualization Technology” and set them to “Enabled”. Proxmox VE supports Secure Boot since version 8.1; with older releases, or if the installer does not start, disable Secure Boot. For virtualization clusters, additional requirements should be taken into account.

Download Proxmox VE ISO and Create Installation Medium

Download the current Proxmox VE ISO file from the official website. Visit proxmox.com and navigate to the download section.

Select the latest stable version of the Proxmox VE ISO. The file is approximately 1 GB in size and contains all necessary components for installation.

Verify the checksum of the downloaded ISO file and compare it with the SHA-256 checksum published on the download page. Use `sha256sum` on Linux or `Get-FileHash` in PowerShell on Windows. This ensures that the file is complete and unaltered.
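A minimal shell sketch of this check, assuming GNU coreutils (`sha256sum`, `cut`, `tr`) on Linux. The function name `verify_iso` is a hypothetical helper; the expected hash is the value you copy from the Proxmox download page.

```shell
# Sketch: compare an ISO's SHA-256 hash with the published checksum.
# verify_iso is a hypothetical helper name, not part of any Proxmox tooling.
verify_iso() {
  local iso_file=$1
  local expected actual
  # Normalise the expected hash to lowercase, the format sha256sum prints.
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
  # sha256sum prints "<hash>  <file>"; keep only the hash field.
  actual=$(sha256sum "$iso_file" | cut -d ' ' -f 1)
  if [ "$actual" = "$expected" ]; then
    echo "OK: $iso_file"
    return 0
  fi
  echo "MISMATCH: $iso_file (got $actual)" >&2
  return 1
}
```

Calling `verify_iso proxmox-ve.iso <hash-from-download-page>` (file name as an example) prints `OK` or reports the mismatch and returns a non-zero exit status. `sha256sum -c` achieves the same when fed a line of the form `<hash>  <file>`.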

Create Bootable USB Stick

- Connect a USB stick with at least 8 GB to your computer.

- Use tools like Rufus (Windows, in DD image mode) or `dd` (Linux) for creation.

- Select the downloaded Proxmox ISO file as the source.

- Start the writing process and wait for completion.
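For the `dd` route, here is a sketch of a small wrapper, assuming a Linux system with GNU coreutils. The function `write_iso_to_usb` and the `DRY_RUN` switch are hypothetical helpers; the options `bs=1M conv=fdatasync` follow the invocation commonly shown in the Proxmox documentation, and `status=progress` is a GNU `dd` extension. Check the target device with `lsblk` first, because `dd` overwrites it without asking.

```shell
# Sketch: write the Proxmox VE ISO to a USB stick with dd.
# Assumption: the target is the whole device (e.g. /dev/sdX), not a partition.
# Everything on the target device is overwritten. DRY_RUN=1 only prints the command.
write_iso_to_usb() {
  local iso=$1 target=$2
  # Refuse partition paths such as /dev/sdb1 or /dev/nvme0n1p2.
  case $target in
    /dev/sd*[0-9] | /dev/nvme*p[0-9]* | /dev/mmcblk*p[0-9]*)
      echo "refusing: $target looks like a partition" >&2
      return 1 ;;
  esac
  set -- dd if="$iso" of="$target" bs=1M conv=fdatasync status=progress
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}
```

Running `DRY_RUN=1 write_iso_to_usb proxmox-ve.iso /dev/sdX` shows the command for review; repeating it as root without `DRY_RUN` performs the write.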

Alternatively, you can burn the ISO file to a DVD if your target system has an optical drive. However, USB sticks enable faster installation.

Perform Proxmox Installation and Basic Configuration

Boot the target computer from the created installation medium. Configure the boot order in the BIOS so that USB or DVD takes precedence over the hard drive.

After booting, the Proxmox VE boot menu will appear. Select “Install Proxmox VE” for a standard installation.

Perform Installation Steps

- Accept the license terms by clicking “I agree”.

- Select the target hard drive for the installation.

- Configure partitioning (default settings are usually sufficient). Caution: This will erase all data on the selected target disk.

- Enter and confirm a secure root password.

- Enter a valid email address for system notifications.

For network configuration, assign a static IP address to your Proxmox system. Avoid DHCP in production environments, as the IP address could change.
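With a static address, the installer writes the network settings to `/etc/network/interfaces`, typically as a Linux bridge `vmbr0` on top of the physical interface. The following sketch prints a configuration of that shape. The function name, the interface name `eno1`, and the addresses are illustrative assumptions, so compare the output with the file on your own host rather than copying it blindly.

```shell
# Sketch: print a static bridge configuration in /etc/network/interfaces syntax.
# Arguments (example values only): physical NIC, address/prefix, gateway.
print_static_bridge() {
  local nic=$1 address=$2 gateway=$3
  cat <<EOF
auto lo
iface lo inet loopback

iface $nic inet manual

auto vmbr0
iface vmbr0 inet static
    address $address
    gateway $gateway
    bridge-ports $nic
    bridge-stp off
    bridge-fd 0
EOF
}
```

After editing the file, `ifreload -a` (from ifupdown2, the default network stack on current Proxmox VE releases) applies the changes.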

Important note: Write down the IP address and root password. You will need this information for the first access to the web interface.

The installation process takes approximately 10–15 minutes. After completion, remove the installation medium and restart the system.

Optimize First Steps After Installation

After restarting, Proxmox VE is accessible via the web interface. Open a browser and navigate to https://your-proxmox-ip:8006.

Log in with the username “root” and the password set during installation. For now, accept the browser’s warning about the self-signed SSL certificate.

Adjust Repository Configuration

Open the shell via the web interface and perform the following optimizations:

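- Configure the community (no-subscription) repositories for free updates, and disable the enterprise repository if you do not have a subscription, since `apt update` cannot access it without one.

- Update package lists with `apt update`.

- Install available updates with `apt full-upgrade`. Proxmox recommends `full-upgrade` (or `dist-upgrade`) over plain `apt upgrade`, because `apt upgrade` never removes packages and can therefore leave a Proxmox system partially upgraded.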

- Remove the enterprise repository warning, if desired.
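A sketch of the repository switch, assuming Proxmox VE 8 on Debian 12 “bookworm”, where the repositories are one-line `.list` files in `/etc/apt/sources.list.d/`. Proxmox VE 9 (Debian 13) uses the deb822 `.sources` format instead, so the file names and syntax would need adapting there. The function names and the `APT_DIR`/`PVE_SUITE` variables are hypothetical helpers; the repository URL and the component `pve-no-subscription` are the ones Proxmox documents for the free repository.

```shell
# Sketch for Proxmox VE 8 (Debian 12 "bookworm"), one-line .list repository files.
# APT_DIR and PVE_SUITE are hypothetical variables so the functions can be tested.
APT_DIR=${APT_DIR:-/etc/apt/sources.list.d}
PVE_SUITE=${PVE_SUITE:-bookworm}

# Comment out the enterprise repository, which requires a subscription key.
disable_enterprise_repo() {
  local file="$APT_DIR/pve-enterprise.list"
  if [ -f "$file" ]; then
    sed -i 's/^deb /# deb /' "$file"
  fi
}

# Add the free pve-no-subscription repository.
enable_no_subscription_repo() {
  echo "deb http://download.proxmox.com/debian/pve $PVE_SUITE pve-no-subscription" \
    > "$APT_DIR/pve-no-subscription.list"
}

# Usage (as root on the Proxmox host):
#   disable_enterprise_repo
#   enable_no_subscription_repo
#   apt update && apt full-upgrade
```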

A basic firewall configuration should be the next step. Activate the Proxmox firewall and configure rules for SSH access (TCP port 22) and the web interface (TCP port 8006).
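As an illustration, the datacenter-wide firewall rules live in `/etc/pve/firewall/cluster.fw`. The sketch below prints a minimal file that allows SSH and the web interface from one management subnet. The function name and the example subnet `192.0.2.0/24` are assumptions. Writing the output to `cluster.fw` replaces any existing rules, so keep console access (for example via IPMI) at hand when enabling the firewall for the first time.

```shell
# Sketch: print a minimal cluster.fw that enables the firewall and allows
# SSH (macro) and the web interface (TCP 8006) from one management subnet.
# The subnet argument is an example value; adapt it to your network.
print_cluster_fw() {
  local mgmt_net=$1
  cat <<EOF
[OPTIONS]
enable: 1

[RULES]
IN SSH(ACCEPT) -source $mgmt_net
IN ACCEPT -source $mgmt_net -p tcp -dport 8006
EOF
}
```

`print_cluster_fw 192.0.2.0/24 > /etc/pve/firewall/cluster.fw` would activate these rules; `pve-firewall status` shows whether the firewall is running.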

Create additional user accounts for daily administration. Avoid permanent use of the root account and assign specific permissions according to the area of responsibility.

Configure a valid SSL certificate for the web interface. This increases security and eliminates browser warnings for future access.

How credativ® supports Proxmox implementations

credativ® offers comprehensive Proxmox virtualization services, from planning to production operation. Our experienced team will guide you through all phases of your virtualization initiative.

Our Proxmox services include:

- Professional installation consulting and hardware dimensioning for optimal performance

- 24/7 premium support with direct access to open-source specialists

- Monitoring and maintenance of your Proxmox infrastructure

- Backup strategies and disaster recovery concepts

- Training and workshops for your IT teams

- Migration services from existing virtualization platforms

We develop customized solutions that precisely match your requirements. You benefit from our many years of expertise in the open-source sector and direct collaboration with Proxmox developers.

Arrange a non-binding consultation today and find out how we can make your Proxmox implementation a success. Contact us for an individual analysis of your virtualization requirements.

The decision for credativ Proxmox Enterprise Support depends on your business requirements, the criticality of your virtualization environment, and your available internal IT resources. Enterprise Support offers professional assistance, stable updates, and advanced features, while the Community Edition remains free but without guaranteed support. This analysis will help you make the right decision for your company.

What is Proxmox Enterprise Support and how does it differ from the Community Edition?

Proxmox Enterprise Support is the paid version of the virtualization platform, offering professional support, stable updates, and access to enterprise repositories. The community repositories provide the same basic functionality for free, but without a support guarantee and with less thoroughly tested updates. Both versions are provided by Proxmox Server Solutions GmbH under the AGPL license. However, there is an important additional distinction: those who wish to save costs can gain access to the enterprise repositories by purchasing a so-called Community Subscription. This, however, only includes access and no further support.

The most significant difference lies in the support level. Enterprise customers receive direct access to the Proxmox team for technical issues, while community users rely on forums and community assistance. Enterprise Support includes various service levels, from Basic to Premium, with different response times.

The update cycles differ significantly. Enterprise repositories contain thoroughly tested, stable updates optimized for production environments. Community updates appear more frequently but are less intensively tested and can be more unstable.

In terms of features, there is no difference: backup options, cluster management tools, and monitoring functions are part of the open-source code base and available with or without a subscription. What the subscription adds is access to the enterprise repository, support, and the associated service guarantees.

When is Proxmox Enterprise Support worthwhile for businesses?

Enterprise Support is particularly worthwhile for companies with critical production environments, limited internal IT resources, or strict compliance requirements. Companies with approximately 50 employees or more, or those with high availability demands, generally benefit from professional support.

A critical factor is the availability requirements of your systems. If outages cause high costs or significantly disrupt business processes, investing in Enterprise Support is justified. This applies particularly to e-commerce, financial service providers, or manufacturing operations.

Internal IT expertise plays a crucial role. If specialized virtualization experts are lacking in the team, Enterprise Support provides valuable assistance with complex problems. Smaller IT teams particularly benefit from the available expertise.

Compliance requirements may necessitate Enterprise Support. Many industries require documented support for audit purposes. Enterprise Support provides the necessary documentation and traceability for regulated environments.

What does Proxmox Enterprise Support cost and what licensing models are available?

Proxmox Enterprise Support is licensed per CPU socket with various support levels. Prices start at 120 Euros per socket per year for access to the enterprise repositories without support, while Premium Support incurs significantly higher costs but offers faster response times. (As of January 1, 2026)
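The per-socket model makes the yearly cost simple arithmetic. A minimal sketch, using the 120 EUR entry price from the paragraph above as the default; the function names are hypothetical, and `lscpu -p=SOCKET` (util-linux) is used to count the physical sockets of a host.

```shell
# Sketch: yearly subscription cost = total CPU sockets x price per socket.
# Default price: the 120 EUR entry level quoted above (repository access only,
# as of January 1, 2026); pass the current price of your support level instead.
annual_cost() {
  local sockets=$1 price=${2:-120}
  echo $(( sockets * price ))
}

# Count the physical CPU sockets of the current host (one line per distinct socket ID).
socket_count() {
  lscpu -p=SOCKET | grep -v '^#' | sort -u | wc -l
}
```

For example, a cluster of three hosts with two sockets each has six sockets, so `annual_cost 6` yields 720 EUR per year at the entry price.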

The Basic or Standard package includes access to enterprise repositories, updates, and email support during business hours. This level is suitable for smaller environments without particularly high availability requirements.

Premium Support offers shorter response times and extended services. The costs are significantly higher, but for critical systems, they are well justified by minimized downtime.

Additional cost factors include the number of CPU sockets, desired response times, and special services such as on-site support or individual training. Larger deployments often receive volume discounts.

What alternatives are there to Proxmox Enterprise Support?

Alternatives to official Enterprise Support include Community Support, third-party service providers, in-house expertise development, or hybrid approaches. Each option offers different advantages and disadvantages depending on the company’s situation.

Community support via forums and documentation remains free but offers no guarantees for response times or problem resolution. Experienced IT teams can often find solutions independently, while less experienced teams may struggle.

Support from specialized third-party providers like credativ GmbH can be more cost-effective than official Enterprise Support. These providers often understand local requirements better and offer more flexible service packages. However, quality varies among providers.

Developing in-house expertise through training and certifications offers long-term independence but requires investment in personnel and time. Hybrid approaches combine internal expertise with external open-source support for particularly complex problems.

How credativ® helps with Proxmox decisions and support

credativ® supports you in the strategic decision for the appropriate Proxmox support strategy and offers comprehensive technical support for your virtualization environment. As a vendor-independent open-source specialist, we analyze your requirements and develop tailored support concepts.

Our Proxmox services include:

- Needs analysis and support strategy consulting for your virtualization environment

- 24/7 technical support with direct access to Linux and virtualization experts

- Optional: Proactive monitoring and maintenance of your Proxmox clusters

- Migration and implementation of Proxmox solutions

- Training and knowledge transfer for your IT teams

- Hybrid support models as an alternative to pure Enterprise Support

With over 25 years of experience in the open-source sector, we offer you the security of professional support without vendor lock-in. Contact us for a free consultation on your optimal Proxmox support strategy.

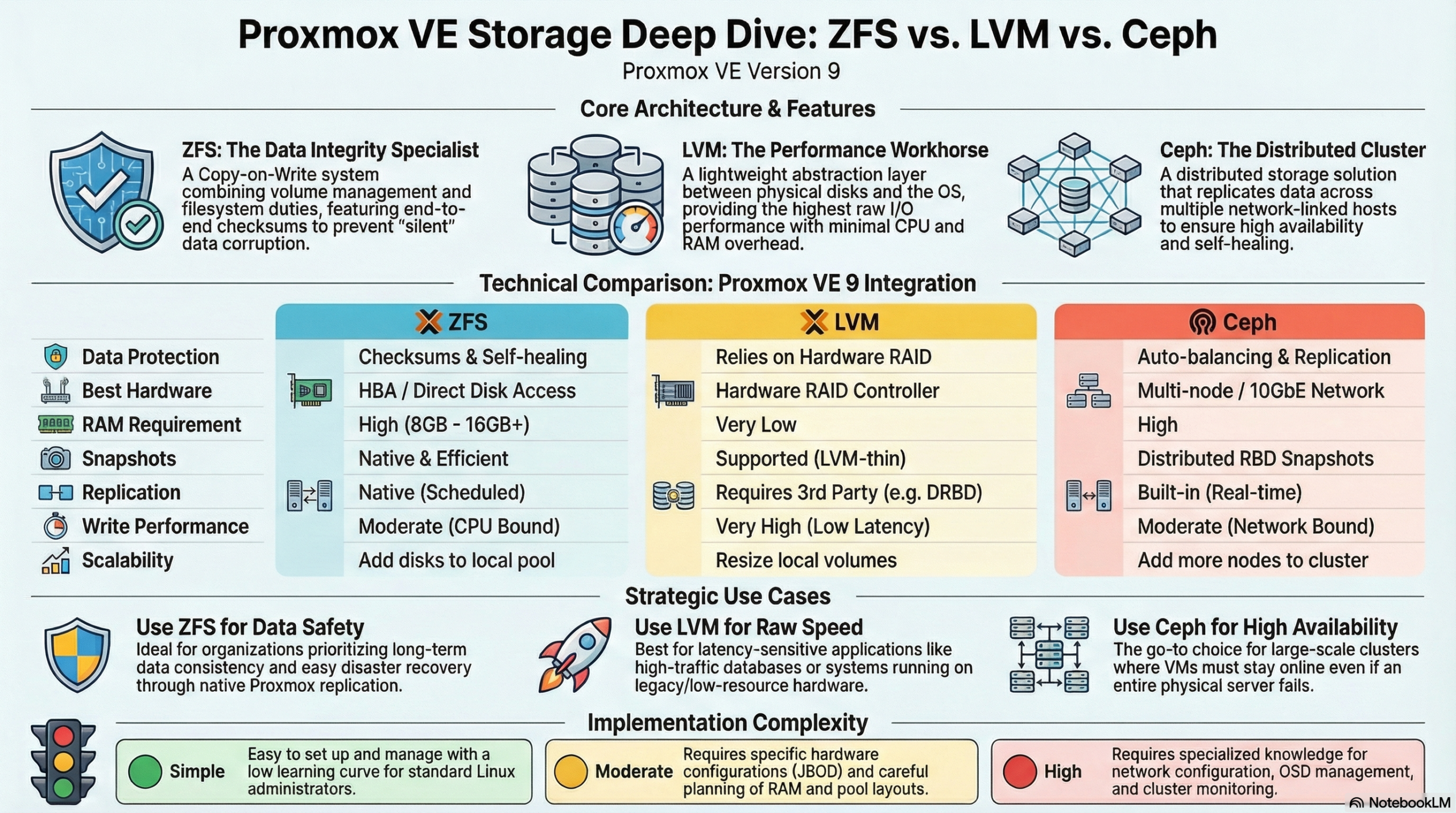

The choice between ZFS, LVM, and Ceph in Proxmox depends on your specific requirements. ZFS offers integrated data redundancy and snapshots for local systems, LVM enables flexible volume management with high performance, while Ceph provides distributed storage solutions for cluster environments. Each technology has different strengths in terms of performance, scalability, and maintenance effort.

What is the difference between ZFS, LVM, and Ceph in Proxmox?

ZFS is a copy-on-write file system with integrated volume management and data redundancy. It combines a file system and volume manager into one solution and offers features such as snapshots, compression, and automatic error correction. ZFS is particularly suitable for local storage scenarios with high data integrity requirements.

LVM (Logical Volume Manager) works as an abstraction layer between physical disks and the file system. It enables flexible partitioning and dynamic volume resizing at runtime. LVM offers high performance and easy management, but requires additional redundancy mechanisms such as software RAID.

Ceph represents a fully distributed storage architecture that replicates data across multiple nodes. It provides object, block, and file storage in a single system and scales horizontally. Ceph is suitable for large cluster environments with high availability requirements.

Which storage solution offers the best performance for different workloads?

LVM with ext4 or XFS delivers the highest performance for I/O-intensive applications such as databases. The low overhead makes it the first choice for latency-critical workloads. ZFS follows with good performance alongside data integrity features, while Ceph exhibits higher latency due to network overhead.

For database workloads, LVM with fast SSDs and direct access is recommended. The minimal abstraction layer reduces latency and maximizes IOPS. ZFS can offer competitive performance here through ARC cache and L2ARC acceleration, especially for read-heavy workloads.

File services benefit from ZFS features such as deduplication and compression, which save storage space.

Ceph is suitable for distributed file services with high availability requirements, even if performance is limited by network communication. Because all hosts access the same storage, virtual machines can be live-migrated from host to host with virtually no delay, for example by a load balancer such as ProxLB, and restarted on another host in the event of a failover.

Virtual machines run well on all three systems. LVM offers the best raw performance, ZFS enables efficient VM snapshots, and Ceph provides live migration between hosts without copying disk data, since all hosts access the same shared storage.

| Feature | LVM | ZFS | Ceph |
|---|---|---|---|
| Architecture Type | Local (Block Storage) | Local (File System & Volume Manager) | Distributed (Object/Block/File) |
| Performance (Latency) | Excellent (Minimal Overhead) | Good (Scales with RAM/ARC) | Moderate (Network Dependent) |
| Snapshots | Yes | Yes (very efficient) | Yes |
| Data Integrity | Limited (RAID-dependent) | Excellent (Checksumming) | Excellent (Checksumming) |
| Scalability | Limited (Single Node) | Medium (within host) | Very high (Horizontal in cluster) |
| Network Requirements | Standard (1 GbE sufficient) | Standard (1 GbE sufficient) | High (min. 10-25 GbE recommended) |
| Main Application Area | Maximum single-node performance | High data security & local speed | Enterprise cluster & high availability |
| Complexity | Simple | Moderate | High |

How do you decide between local and distributed storage architecture?

Local storage solutions such as ZFS and LVM are suitable for single-host environments or when maximum performance is more important than high availability. Distributed systems like Ceph are necessary when data must be available across multiple hosts or when automatic failover mechanisms are required.

Infrastructure size plays a decisive role. Individual Proxmox hosts or small setups with two to three servers work well with local storage solutions. From three to four hosts, Ceph becomes interesting, as it enables true high availability without a single point of failure. Ceph relies on a quorum of its monitors: a majority of them must be reachable, which is why an odd number of monitors (typically three or five) is used. In clusters with an even number of nodes, not every node therefore runs a monitor, and the quorum of the Proxmox VE cluster itself may require manual adjustments, for example an external QDevice.
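The reason for odd numbers is majority quorum: with `n` voting members, more than half of them, that is `n / 2 + 1` with integer division, must be reachable. The sketch below computes this. It applies to Ceph monitors and, assuming the default of one vote per node, to the Proxmox VE cluster as well; the function names are hypothetical.

```shell
# Sketch: majority quorum arithmetic for n voting members.
quorum_majority() {
  # More than half: n / 2 + 1 with integer division.
  echo $(( $1 / 2 + 1 ))
}

tolerated_failures() {
  # Members that may fail while a majority is still reachable.
  echo $(( $1 - $(quorum_majority "$1") ))
}

for n in 3 4 5; do
  echo "$n members: majority $(quorum_majority "$n"), tolerates $(tolerated_failures "$n") failure(s)"
done
# 3 members: majority 2, tolerates 1 failure(s)
# 4 members: majority 3, tolerates 1 failure(s)
# 5 members: majority 3, tolerates 2 failure(s)
```

A fourth member raises the required majority without tolerating an additional failure, which is why four-node clusters usually still run only three Ceph monitors.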

Network requirements differ significantly. Local storage systems only require a standard network for management, while Ceph requires dedicated 10GbE connections for optimal performance. For higher performance needs, 25GbE connections are preferred today for the data traffic. Ideally, these connections are reserved exclusively for Ceph, in addition to the network connections used for virtualization. The network infrastructure therefore significantly influences the storage decision.

Maintenance effort and complexity increase with distributed systems. ZFS and LVM are easier to understand and maintain, while Ceph requires specialized knowledge for configuration, monitoring, and troubleshooting.

Proxmox VE with ZFS offers a middle ground between true shared storage and purely local data storage: ZFS replication (pve-zsync or the built-in storage replication) keeps the hosts in sync automatically. However, this is not synchronous but happens at fixed intervals, for example every 15 minutes. For certain workloads, this can be perfectly sufficient.

What are the most important factors in Proxmox storage planning?

Hardware requirements vary greatly between storage technologies. ZFS requires sufficient RAM (1 GB per TB of storage) so that the integrated ARC cache can reach its full performance, LVM runs on minimal hardware, and Ceph requires dedicated network hardware, as well as sufficient RAM and multiple hosts. Hardware equipment often determines the available storage options.
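A sketch of the RAM rule of thumb, assuming whole TiB values. The Proxmox VE documentation phrases it as 2 GiB base plus 1 GiB per TiB of storage, which the functions below (hypothetical names) compute; the byte value can be used for the `zfs_arc_max` module parameter.

```shell
# Sketch: ZFS ARC sizing rule of thumb (2 GiB base + 1 GiB per TiB of storage).
# Assumption: the storage size is given in whole TiB.
arc_size_gib() {
  local storage_tib=$1
  echo $(( 2 + storage_tib ))
}

# The same value in bytes, as expected by the zfs_arc_max module parameter.
arc_max_bytes() {
  echo $(( $(arc_size_gib "$1") * 1024 * 1024 * 1024 ))
}

# Usage (as root), e.g. for a pool with 6 TiB of storage
# (or edit an existing zfs_arc_max line instead of appending):
#   echo "options zfs zfs_arc_max=$(arc_max_bytes 6)" >> /etc/modprobe.d/zfs.conf
#   update-initramfs -u -k all   # required if the root file system is on ZFS
```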

Backup strategies must match the chosen storage solution. ZFS snapshots enable efficient incremental backups, LVM snapshots offer similar functionality, while Ceph backups are implemented via RBD snapshots or external tools. Backup requirements significantly influence the choice of storage.

Scalability planning should take future growth into account. LVM allows for easy volume expansion, ZFS pools can be expanded with additional drives, and Ceph scales by adding new hosts. The planned growth direction influences the optimal storage architecture.

Budget considerations include not only hardware costs but also maintenance effort and the required expertise. Simple LVM setups have low total costs, while Ceph clusters require higher investments in hardware and training.

Bonus: ZFS and Ceph both offer integrated checksumming procedures that actively help against so-called "bit rot" – creeping data corruption – and can automatically detect and correct it through redundancy. LVM without additional layers like a RAID layer does not allow for this.

How credativ® supports Proxmox storage optimization

credativ® offers comprehensive consulting and implementation for optimal Proxmox storage decisions based on your specific requirements. Our open-source experts analyze your workloads, infrastructure, and growth plans to recommend the ideal storage architecture.

Our services include:

- Detailed storage architecture assessment and technology selection

- Professional implementation and configuration of ZFS, LVM, or Ceph

- Performance optimization and monitoring setup for selected storage solutions

- 24/7 support and maintenance for production Proxmox environments

- Training for your IT team on storage management and best practices

With over 25 years of experience in the open-source sector and direct access to our permanent Linux specialists, you receive professional Proxmox support without going through call centers. Contact us for a personalized consultation on your Proxmox storage strategy and benefit from our proven enterprise support.

CERN PGDay 2026 took place on Friday, February 6, 2026, at CERN campus in Geneva, Switzerland. This was the second annual PostgreSQL Day at CERN, co-organized by CERN and the Swiss PostgreSQL Users Group. The conference offered a single-track schedule of seven sessions (all in English), followed by an on-site social event for further networking in the inspiring environment of CERN. With around 100 participants, this is already a very large PostgreSQL event for Switzerland. But what made this event absolutely special was its location. Hosting a database conference at CERN – one of the world’s leading science laboratories – provided a unique experience for everyone.

Introduction and Project History

Nowadays, many online accounts come with cloud storage, whether it is a Google account with Drive or a Dropbox account created to collaborate with other project participants. Unfortunately, the data stored there is not end-to-end encrypted, and providers often communicate unclearly how they handle it and what they might use it for. Without additional protection, such storage is therefore not suitable for confidential data.

Cryptomator is an open-source encryption tool developed in 2015 by the German company Skymatic GmbH to enable the secure storage of data in cloud storage solutions. The project arose from the realization that while many cloud providers offer convenience, they do not guarantee sufficient control over the confidentiality of stored data. It addresses this problem through client-side, transparent encryption that works independently of the respective provider. This is implemented by creating encrypted vaults (“Vaults”) in any cloud storage, with all encryption operations performed locally on the end device. As a result, control over the data remains entirely with the user.

Since its release, Cryptomator has evolved into one of the most well-known open-source solutions for cloud encryption. In 2016, the project was even honored with the CeBIT Innovation Award in the “Usable Security and Privacy” category. Development continues actively, with a focus on stability, compatibility, and cryptographic robustness.

Licensing

The project itself is available under a dual licensing structure. The desktop version is licensed as an open-source project under the GNU General Public License v3.0 (GPLv3). This means that the source code is freely viewable, modifiable, and redistributable, as long as the terms of the license are met. The source code is publicly accessible on GitHub: https://github.com/cryptomator/cryptomator.

For companies wishing to integrate Cryptomator into commercial products (e.g., as a white-label solution), the manufacturer offers a proprietary licensing option. The desktop software remains free to use, while development is primarily funded through donations and revenue from the mobile apps.

The mobile applications for Android and iOS are available in their respective app stores. While the basic functions are free, full functionality requires in-app purchases which, as mentioned, fund the project.

Architecture and Functionality

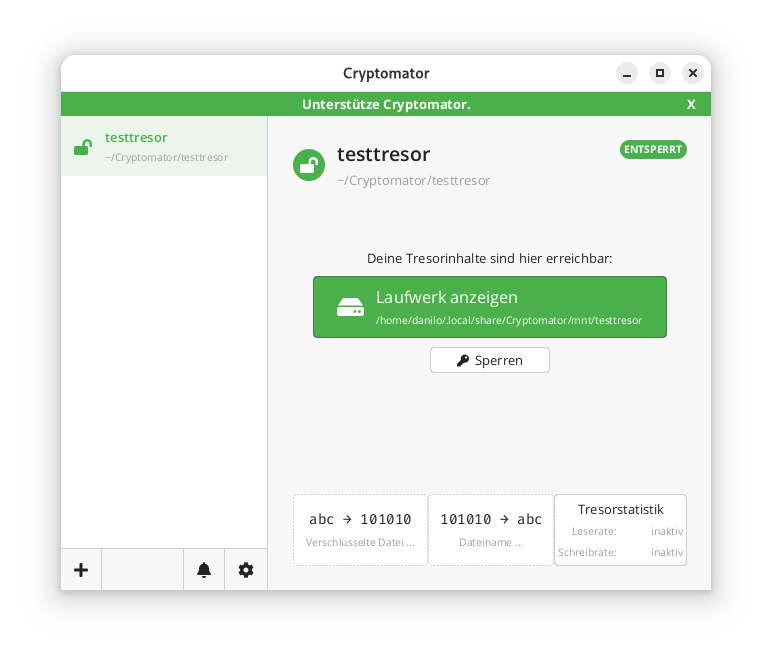

Cryptomator is based on the principle of transparent, client-side encryption. It creates encrypted directories, so-called vaults, within any folder managed by a cloud synchronization service. The software mounts these vaults as a virtual drive, so that for the user they behave like a normal folder in the file system. Vaults also work in purely local folders; they do not have to be located in a synchronized folder.

All file operations (reading, writing, renaming) are transparently intercepted and encrypted or decrypted by Cryptomator before they are stored on the hard drive and/or synchronized to the cloud. The actual synchronization is handled by the respective cloud client—Cryptomator itself does not perform any network communication.

Cryptomator stores each file as a separately encrypted `.c9r` file below the `d` directory, in subfolders with hashed names, and adds small metadata files such as `dirid.c9r`. It is important that the cloud client synchronizes these files reliably to avoid data corruption. This layout hides file names and the folder structure; the number of files and their approximate sizes, however, remain visible.

The architecture is designed so that no central servers are required. There is no registration, no accounts, and no transmission of metadata. The system operates strictly according to the zero-knowledge principle: only the user possesses the key for decryption.

This also means that if the vault password is lost, there is no longer any way to access the data. When creating a vault, however, you can have an additional recovery key generated. Keep it in a suitably secure location, such as a KeePass database or another password manager of your choice.

Since the file masterkey.cryptomator is also critical for decryption, it must be ensured that it is not deleted. In case of doubt, it can simply be backed up externally, just like the password and the recovery key.

If a vault is unlocked, it can be selected directly via the GUI client and opened in the file manager using a button.

In the locally mounted and decrypted vault, currently only the initial file can be found:

```
$ ls -l ~/.local/share/Cryptomator/mnt/testtresor
total 0
-rw-rw-r-- 1 danilo danilo 471 10. Feb 11:07 WELCOME.rtf
```

In the actual file system where the vault was created in encrypted form, it looks like this instead:

```
$ tree ~/Cryptomator/testtresor/
/home/danilo/Cryptomator/testtresor/
├── c
├── d
│   └── KY
│       └── FEV7TA6N4UV5I3PFT6P7D7DCTNGLDU
│           ├── dirid.c9r
│           └── feotyFJDU3AD_3wyOp7Tbd83QUgUGcB46vYT.c9r
├── IMPORTANT.rtf
├── masterkey.cryptomator
├── masterkey.cryptomator.57A62350.bkup
├── vault.cryptomator
└── vault.cryptomator.2BB16E73.bkup

5 directories, 7 files
```

The IMPORTANT.rtf file created here only contains a note indicating that it is a Cryptomator vault and a link to the documentation.
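The folder names under `d` follow a fixed scheme. Assuming vault format 8 as described in Cryptomator's security architecture documentation, each directory ID is encrypted with AES-SIV, hashed with SHA-1, and Base32-encoded into 32 characters (160 bits at 5 bits per character); the first two characters name the parent folder and the remaining 30 the subfolder. Computing the hash itself needs the vault keys and is not shown here; the sketch below (hypothetical function name) only reproduces the path split, using the hash from the listing above.

```shell
# Sketch: split a 32-character Base32 directory hash into Cryptomator's d/XX/... path.
# Assumption: the hash was already computed from the encrypted directory ID.
dir_path_from_hash() {
  local hash=$1
  if [ ${#hash} -ne 32 ]; then
    echo "expected 32 characters, got ${#hash}" >&2
    return 1
  fi
  # First two characters: parent folder; remaining 30: subfolder.
  printf 'd/%s/%s\n' "$(printf '%s' "$hash" | cut -c1-2)" "$(printf '%s' "$hash" | cut -c3-32)"
}
```

For the test vault, `dir_path_from_hash KYFEV7TA6N4UV5I3PFT6P7D7DCTNGLDU` yields `d/KY/FEV7TA6N4UV5I3PFT6P7D7DCTNGLDU`, matching the tree output.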

Cryptographic Procedures

Cryptomator’s security is based on established, standard-compliant cryptographic procedures:

– Encryption of file contents: AES-256 in SIV-CTR-MAC or GCM mode, depending on the version. This ensures both confidentiality and integrity.

– Encryption of file and folder names: AES-SIV to enable deterministic encryption without opening security vulnerabilities.

– Password derivation: The Key Encryption Key (KEK) is derived from the user password using **scrypt**, a memory-intensive key derivation algorithm that makes brute-force attacks more difficult.

– Key management: Each vault has two 256-bit master keys, one for encryption and one for MAC. They are encrypted with the Key Encryption Key (KEK) derived from the password and stored in the `masterkey.cryptomator` file.

Further details on the cryptographic architecture are described in the official documentation.

Integration of Third-Party Services

Cryptomator distinguishes between desktop and mobile clients regarding cloud integration.

On desktop systems (Windows, macOS, Linux), integration works via the local synchronization folder of the respective cloud provider. Since Cryptomator only encrypts a folder and does not perform direct network communication, compatibility is very high. Any provider that provides a local folder (e.g., Dropbox, Google Drive, OneDrive, Nextcloud) can be used. Aside from this, an official [CLI client](https://github.com/cryptomator/cli) is also available. However, this appears to be less actively developed than the GUI client; at least the last commit and release are already more than six months old.

On mobile devices (Android, iOS), integration takes place either via native APIs or via the WebDAV protocol. Supported providers such as Dropbox, Google Drive, or OneDrive are offered directly in the app. For other services that support WebDAV (e.g., Nextcloud, ownCloud, MagentaCLOUD, GMX, WEB.DE), a connection can be established manually via the WebDAV interface.

A current list of supported services is available here.

Important Notes on Integration

– WebDAV and Two-Factor Authentication (2FA): For providers with 2FA, an app-specific password is often required, as the main password cannot be used for WebDAV.

– pCloud: WebDAV is disabled when 2FA is activated, which makes use via Cryptomator impossible.

– Multi-Vault Support: Multiple vaults can also be managed in parallel in the client.

External Security Validation of the Project

In 2017, Cryptomator underwent a comprehensive security audit by the recognized security company Cure53. The test covered the project’s core cryptographic libraries, including cryptolib, cryptofs, siv-mode, and cryptomator-objc-cryptor. The audit was predominantly positive: the architecture was rated as robust and the attack surface as very small.

One critical finding concerned unintentional public access to the private GPG signing key, which has since been resolved. Another less critical note referred to the use of AES/ECB as the default mode in an internal class, which, however, was not used in the main encryption path.

The full audit report in PDF format is publicly available.

This is the only publicly available audit of the project that the author could find. However, since audits are generally expensive and time-consuming, the project should be credited for having it conducted and made publicly available.

All other known security issues are also listed on the project’s GitHub page and are visible to everyone.

Conclusion

Cryptomator represents a technically sophisticated, transparent, and cross-platform solution for encrypting data in cloud storage or on local storage. Through the consistent implementation of the zero-knowledge principle and the use of established cryptographic procedures, it offers high security combined with good user-friendliness.

The separation between encryption and synchronization enables broad compatibility with existing cloud services without them having to change their infrastructure. The open-source nature of the software allows for audits and fosters trust in its security.

Even for less technically savvy users who want to maintain control over their data, Cryptomator is one of the best options available thanks to the GUI client. The specific notes regarding the peculiarities of mobile integration and the fact that both the master key and the password must be strictly protected are, of course, still essential.

Update: Proxday will now take place on October 15, 2026. We have moved the date to avoid a clash with the Dutch Proxmox Day of our friends at Tuxis. All other information remains current – the CfP deadline is extended accordingly to July 31, 2026.

Save the Date: On October 15, 2026, we are bringing the Proxmox world together in Mönchengladbach. With Proxday 2026, we are creating an event that goes far beyond our previous formats. While our Virtualization Gathering and Business Breakfast have already provided valuable impetus, it is now time for the ‘Next Level’. We are dedicating a full day to Proxmox VE and finally giving the community the space it deserves – for deep dives, exchange of experiences, and technical innovations.

Proxday is organized by credativ GmbH and is intended as a meeting point by the community for the community. The focus here is on practical exchange of experience, in-depth technical knowledge, and networking among experts. To ensure the program reflects the full spectrum of the Proxmox world, we invite you to actively contribute to shaping the day.

Call for Papers: Share Your Expertise!

The Call for Papers (CfP) is now officially open. We are looking for exciting presentations, technical deep dives, and practical experience reports. Whether you have built a complex infrastructure, developed automation solutions, or successfully migrated to Proxmox – the community benefits from your knowledge.

Possible topics for your submission:

- Proxmox VE & Storage: Best practices for Ceph, ZFS, and high availability.

- Automation & IaC: Proxmox management with tools like Ansible or Terraform.

- Backup Strategies: Use of Proxmox Backup Server in enterprise environments.

- Networking & Security: SDN implementations and security concepts.

- Migration: Strategies and case studies for switching from other hypervisors.

The submission deadline for the Call for Papers is July 31, 2026.

Event Overview

We have chosen the Hotel Palace St. George in Mönchengladbach as the venue. With its combination of classic architecture and modern amenities, the hotel provides the perfect setting for a focused conference and intensive exchange.

- When: October 15, 2026

- Where: Mönchengladbach (Hotel Palace St. George)

- What: A day full of expert presentations, networking, and community exchange around Proxmox.

Join us now

Take the opportunity to present your projects to an expert audience or to get early information about the event. All details about the Call for Papers and the venue can be found on the official event website.

We look forward to welcoming the Proxmox community to Mönchengladbach in October 2026!

👉 The CfP is available directly at www.proxday.de

LanguageTool: Powerful Language Checking on Your Own Network

LanguageTool is one of the leading open-source solutions for grammatical and stylistic text checking. While most users are likely familiar with the cloud-based version, the on-premise (self-hosted) variant is gaining increasing importance – especially for businesses, educational institutions, and organizations with high data protection and control requirements.

The core of LanguageTool is licensed under the GNU Lesser General Public License (LGPL-2.1). This license permits the free use, modification, and distribution of the software, even in commercial environments, provided that modifications to LanguageTool itself are also released under the LGPL when distributed. The license is “weak copyleft,” meaning that applications using LanguageTool as a library do not necessarily have to be open source. License information can be found in the COPYING.txt file of the official GitHub repository. Third-party components such as dictionaries may be under different licenses (e.g., GPL).

There is an open-source version as well as an extended premium version with additional features such as improved style, semantics, and format checks. A detailed overview can be found on the website. It is important to note that for self-hosted instances, premium features are only available for commercial use and by individual quote. However, this is poorly communicated and is mostly only clarified in the forum upon request. It also appears that not all premium features are available for self-hosted instances.

Unfortunately, LanguageTool made changes to the use of browser extensions in 2026: a premium subscription is now required for cloud usage. The self-hosted version remains unaffected – here, the browser extension can still be connected to your own server to enable seamless integration into web applications such as email, CMS, or forms.

Features

LanguageTool has a modular design and combines several technologies that go far beyond the built-in spell checking of, for example, LibreOffice or Thunderbird. However, one much-requested feature is not yet available: support for multiple languages within a single document.

Morphological Analyzer & POS Tagger

First, the text is broken down into sentences and words. Each word receives at least one part-of-speech (POS) tag (e.g., noun, verb, adjective). The analyzer also considers inflected forms, so “gegangen” (gone) is correctly identified as a past participle.

Disambiguator

Many words have multiple meanings (e.g., “Bank” as a bench or a financial institution). The disambiguator uses contextual information to select the correct interpretation, either rule-based or statistically, which improves the accuracy of the subsequent rule application.

Rule Engine (XML & Java)

Error detection is based on a combination of:

- XML Rules: Simple patterns like “dass instead of das” or “missing comma before weil.” These are easy to write and maintain.

- Java Rules: Complex, context-dependent rules that are programmatically implemented, e.g., for sentence structure or cross-text repetitions.
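To illustrate the idea behind a simple pattern rule such as “missing comma before weil”, here is a toy sketch in Python. It is not LanguageTool's XML rule format or engine: the regex, the function name check_missing_comma, and the message text are hypothetical, and real rules match POS-tagged tokens rather than raw strings.

```python
import re

# Toy illustration only (not LanguageTool's rule format): flag a lowercase
# "weil" that directly follows a word character and a space, i.e. no comma.
MISSING_COMMA_BEFORE_WEIL = re.compile(r"(?<=\w) (weil)\b")


def check_missing_comma(text: str) -> list[tuple[int, str]]:
    """Return (offset of 'weil', message) for every suspicious occurrence."""
    return [
        (match.start(1), 'Possibly missing comma before "weil"')
        for match in MISSING_COMMA_BEFORE_WEIL.finditer(text)
    ]


for offset, message in check_missing_comma("Ich bleibe zu Hause weil es regnet."):
    print(offset, message)
```

The toy rule would also flag “und weil”, where no comma is needed; handling such context-dependent exceptions is where token-based rules with disambiguation, or Java rules, come in.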

N-Gram Model (optional)

For improved detection of commonly confused words (e.g., “ihre” vs. “Ihre”), an n-gram model can be added. It uses statistical data from vast text corpora (e.g., Google Books) and compares the probability of word sequences. The n-gram data is not included in the standard package but can be downloaded for local use.

User Dictionaries

Custom technical terms can be added to avoid false positives, either via the API or by editing the spelling_custom.txt file.

Markup Support

With AnnotatedText, HTML, LaTeX, or XML documents can be processed without distorting position information.

Java API

For direct integration into Java applications, JLanguageTool offers a powerful interface.

Integration

Integration is versatile: in addition to the browser extension, LanguageTool supports APIs for custom applications, plugins for LibreOffice, Microsoft Word, Thunderbird, and direct connection to development tools. The self-hosted solution thus offers maximum flexibility, security, and scalability – ideal for use in sensitive or regulated environments.

A complete list of integrations is available online. Notably absent is a dedicated plugin for the Outlook desktop client. As far as we could determine, the demand apparently did not justify the development effort, although the only forum posts on this topic are rather old. Nevertheless, the LanguageTool browser extension works without problems with Outlook in the browser, so the limitation should only affect the desktop client.

Deployment

On GitHub, you will find various options for installing a self-hosted service. For local installations in particular, a Docker container is probably the quickest to deploy.

Several images are linked there; the author chose one of them as an example.

The maintainer of that image also offers several nearly ready-to-use, copy-and-paste configurations for starting the service. These include a Docker Compose template that runs the service as an unprivileged user with a read-only file system.

To use it, write the content to a file such as docker-compose.yml, create the ‘ngrams’ and ‘fasttext’ directories, and adjust their ownership, for example to the ‘nobody’ user (UID/GID 65534 on Debian, matching the user setting in the compose file). All subsequent examples were performed on a Debian 13 system.

$ mkdir -p ~/Programme/Languagetool
$ cd ~/Programme/Languagetool
$ mkdir ngrams fasttext
$ sudo chown nobody:nogroup ngrams fasttext

Below is the content of the docker-compose.yml with n-gram support for German and English. Note that the n-gram data is quite large and requires several GB of storage: currently about 3 GB for German and 15 GB for English.

services:
  languagetool:
    image: meyay/languagetool:latest
    container_name: languagetool
    restart: unless-stopped
    user: "65534:65534"
    read_only: true
    tmpfs:
      - /tmp:exec
    cap_drop:
      - ALL
    security_opt:
      - no-new-privileges
    ports:
      - "8081:8081"
    environment:
      download_ngrams_for_langs: de, en
    volumes:
      - ./ngrams:/ngrams
      - ./fasttext:/fasttext

The service can then be started with the following command:

$ docker compose up -d
# Downloading the n-grams may take a while.
$ docker ps
2af60ed08544 meyay/languagetool:latest "/sbin/tini -g -e 14…" 4 weeks ago Up 3 hours (healthy) 0.0.0.0:8081->8081/tcp, :::8081->8081/tcp languagetool

The service is now available, and the plugins should be able to reach it. Note that there is no authentication of any kind: anyone who can reach the URL and port can use the service.
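To check the server from a script rather than a plugin, you can call LanguageTool's HTTP API directly. The following is a minimal sketch using only the Python standard library; it assumes the container above is reachable at http://localhost:8081 and uses the /v2/check endpoint with the text and language form parameters. The helper names build_check_request, summarize_matches, and check are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumption: the container from the compose file above, reachable on this host.
BASE_URL = "http://localhost:8081"


def build_check_request(text: str, language: str = "de-DE",
                        base_url: str = BASE_URL) -> urllib.request.Request:
    """Form-encoded POST request for LanguageTool's /v2/check endpoint."""
    body = urllib.parse.urlencode({"text": text, "language": language}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/v2/check",
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def summarize_matches(result: dict, text: str) -> list[tuple[str, str, list[str]]]:
    """Reduce the JSON response to (flagged text, message, top suggestions)."""
    summary = []
    for match in result.get("matches", []):
        start, length = match["offset"], match["length"]
        # Offsets are assumed to line up with Python string indices, which holds
        # for text without characters outside the Basic Multilingual Plane.
        suggestions = [r["value"] for r in match.get("replacements", [])][:3]
        summary.append((text[start:start + length], match["message"], suggestions))
    return summary


def check(text: str, language: str = "de-DE") -> list[tuple[str, str, list[str]]]:
    """Send text to the local server and return the summarized matches."""
    with urllib.request.urlopen(build_check_request(text, language), timeout=30) as resp:
        return summarize_matches(json.load(resp), text)
```

With the container running, check("Ich glaube das er kommt.") should return whatever matches the server reports for that sentence. Since the server has no authentication, the same request works from any machine that can reach the port.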

Conclusion

LanguageTool on-premise combines data protection-compliant text checking with flexible integration. The LGPL-2.1 license allows free use, while comprehensive interfaces enable seamless integration into office and web applications. With the correct configuration, a local server becomes a fully functional, enterprise-grade solution for linguistic checking.

Proxmox system requirements vary significantly depending on the deployment scenario. For a basic installation, you need at least a 64-bit CPU with virtualization support, 2 GB of RAM, and 32 GB of storage space. However, production environments require considerably more resources, depending on the number of virtual machines and their workloads. Correct hardware sizing determines the performance and stability of your Proxmox infrastructure.
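As a rough illustration of how the number and size of guests drive the RAM requirement, the following sketch adds the guests' memory to a host baseline. It assumes the 2 GB minimum quoted above as the baseline for the Proxmox host itself; the Guest class, the example guests, and the 20 % headroom are hypothetical values, not Proxmox recommendations.

```python
from dataclasses import dataclass

# Assumption: the 2 GB minimum quoted above covers the Proxmox host itself.
HOST_BASELINE_GB = 2.0


@dataclass
class Guest:
    """A planned VM or container; name and RAM figures are hypothetical."""
    name: str
    ram_gb: float


def estimate_host_ram_gb(guests: list[Guest], headroom: float = 0.2) -> float:
    """Host baseline plus the guests' RAM, with a safety margin on the guest total.

    The 20 % default headroom is an illustrative assumption, not a Proxmox rule.
    """
    if headroom < 0:
        raise ValueError("headroom must not be negative")
    guest_total = sum(g.ram_gb for g in guests)
    return HOST_BASELINE_GB + guest_total * (1 + headroom)


# Example: three hypothetical guests with 4 + 8 + 2 = 14 GB of RAM.
plan = [Guest("web", 4), Guest("db", 8), Guest("ci-runner", 2)]
print(f"Estimated host RAM: {estimate_host_ram_gb(plan):.1f} GB")
```

For the example plan this works out to 2 + 14 × 1.2 ≈ 18.8 GB. Storage-related memory use (for example caches) and workload peaks would need to be added on top.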


Mönchengladbach (DE) / Naarden (NL) — February 4th, 2026

Splendid Data and credativ GmbH announce strategic partnership to accelerate Oracle-to-PostgreSQL migration for enterprises.

Splendid Data and credativ GmbH today announced a strategic partnership to help organisations modernize complex Oracle database environments by migrating to native PostgreSQL in a predictable, scalable and future-proof manner.

The partnership addresses growing enterprise demand to reduce escalating database licensing costs while regaining autonomy, ensuring digital sovereignty and preparing data platforms for AI-driven use cases. PostgreSQL is increasingly selected as a strategic database foundation due to its open architecture, strong ecosystem and suitability for modern, data-intensive workloads.

Splendid Data contributes its Cortex automation platform, designed to industrialize large-scale Oracle-to-PostgreSQL migrations and reduce risk across complex database estates. credativ adds deep PostgreSQL expertise, enterprise-grade platform delivery and 24×7 operational support for mission-critical environments. Together, the partners also align on PostgresPURE, a production-grade, pure open-source PostgreSQL platform without proprietary extensions or vendor lock-in.

“Enterprises are making long-term decisions about licensing exposure, autonomy and AI readiness,” said Michel Schöpgens, CEO of Splendid Data. “This partnership combines migration automation with trusted PostgreSQL operations.”

David Brauner, Managing Director at credativ, added: “Together we enable customers to modernize faster while maintaining control, transparency and operational reliability.”

The initial geographic focus of the partnership is Germany, where credativ has a strong customer base. The model is designed to scale toward international enterprise customers over time. Joint activities will include coordinated go-to-market efforts, enablement of credativ teams on Cortex, and the delivery of initial pilot projects with shared customers.

Both companies view the partnership as a long-term collaboration aimed at setting a new standard for large-scale, open-source database modernization in Europe.

About credativ

credativ GmbH is an independent consulting and service company focusing on open source software. Following the spin-off from NetApp in spring 2025, credativ combines decades of community expertise with professional enterprise standards to make IT infrastructures secure, sovereign and future-proof, without losing the connection to its history of around 25 years of work in and with the open source community.

About Splendid Data

Splendid Data is a PostgreSQL specialist focused on large-scale Oracle-to-PostgreSQL migrations for enterprises with complex database estates. Its Cortex platform enables automated, repeatable migrations, while PostgresPURE provides a production-grade, open-source PostgreSQL platform without vendor lock-in.

Contact credativ:

Peter Dreuw, Head of Sales & Marketing (peter.dreuw@credativ.de)

Contact Splendid Data:

Michel Schöpgens, CEO (michel.schopgens@splendiddata.com)

proxmoxer is a Python library for interacting with the Proxmox REST API; its compact implementation stands in contrast to the API’s large number of endpoints.

The library’s implementation does not need to change when endpoints are added or amended in new Proxmox releases. As a consequence, however, the few generic functions that handle all of these endpoints cannot be annotated with the specific return type of each individual endpoint.

proxmoxer-stubs provides external stub files and data containers for proxmoxer, which it generates from the API documentation’s specification for Proxmox versions 6 to 9.

Static type-checkers may then infer the types of a call chain like this:

import typing
import proxmoxer

typing.reveal_type(
    proxmoxer.ProxmoxAPI().cluster.notifications.endpoints.smtp("argument").get()["mode"]
)

Revealed type is "Literal['insecure'] | Literal['starttls'] | Literal['tls']"
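Because the mode field is exposed as a union of Literal types, downstream code can let the type checker verify that every mode is handled. The following is a minimal sketch that is not part of proxmoxer or proxmoxer-stubs: the alias SmtpMode, the helper default_smtp_port, and the local assert_never are hypothetical, and the port mapping is an assumption based on common SMTP conventions rather than values read from Proxmox.

```python
from typing import Literal, NoReturn

# Hypothetical alias mirroring the revealed type of the "mode" field.
SmtpMode = Literal["insecure", "starttls", "tls"]


def assert_never(value: NoReturn) -> NoReturn:
    """Unreachable if every Literal member is handled; type checkers flag gaps."""
    raise AssertionError(f"Unhandled SMTP mode: {value!r}")


def default_smtp_port(mode: SmtpMode) -> int:
    # Assumed mapping based on common SMTP conventions, not read from Proxmox.
    if mode == "insecure":
        return 25
    elif mode == "starttls":
        return 587
    elif mode == "tls":
        return 465
    else:
        # If the stubs ever list an additional mode, a type checker reports this
        # call because `mode` is no longer narrowed to an uninhabited type.
        assert_never(mode)
```

Passing the result of the call chain above into default_smtp_port() would then be checked statically against the three literal values, without any runtime cost.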

Open source software differs from proprietary software primarily in the availability of the source code and the licensing. With open source, the source code is freely accessible and can be viewed, modified, and distributed by users. Proprietary software, on the other hand, is distributed with closed source code that belongs exclusively to the manufacturer. These fundamental differences influence costs, flexibility, support, and strategic decisions in companies. In this article, I would like to attempt a comparison of open source software vs. proprietary software.

This article does not constitute legal advice and only reflects the author’s personal assessment at the time of publication. For legal advice on licensing issues, please consult an attorney. Open source licenses determine how software may be used, modified, and distributed. Three of the most widely used licenses are the GPL (copyleft), MIT (permissive), and Apache (permissive with patent protection). The GPL requires that changes also remain freely available, while MIT and Apache offer more flexibility for commercial use. The right license choice depends on your business goals and legal requirements.

What are open source licenses and why are they important?

Open source licenses are legal agreements that define the terms under which software may be freely used, copied, modified, and distributed. They create legal certainty for developers and users by defining clear rules for dealing with the source code. Without these licenses, the use of third-party software would be legally problematic.

The legal significance of open source licenses is immense: by default, copyright reserves all rights to the author, and a license grants specific permissions on top of that. Companies need to understand these licenses, as violations can lead to costly litigation.

Open source licenses can be divided into two main categories:

- Copyleft licenses (such as the GPL): require changes to be published under the same license

- Permissive licenses (such as MIT, Apache): allow integration into proprietary software without publication obligation

This distinction significantly influences your business strategy and product development. Copyleft licenses promote community development but can restrict commercial models. Copyleft conditions are often described as “infectious”; this is not meant negatively but simply points out that works derived from copyleft code are generally subject to the same license. Permissive licenses offer more flexibility for companies that want to develop proprietary solutions.

What is the difference between GPL, MIT, and Apache licenses?

The GPL license is a strict copyleft license that requires all changes and derivative works to also be under the GPL. MIT and Apache are permissive licenses that offer more freedoms, with Apache including additional patent protection. The choice between these licenses determines how you can use the software in your projects.

GPL (General Public License) protects the freedom of software through the copyleft principle. If you use and distribute GPL-licensed software, you must:

- provide or make available the source code

- also place your changes under the GPL

- clearly identify the license terms

The MIT license is the simplest permissive license. It allows virtually anything as long as you:

- retain the original copyright notice

- include the license terms in copies of the software

The Apache license is similar to the MIT license, but also offers:

- explicit patent protection for users

- protection of the licensor's trademarks (the license explicitly grants no trademark rights)

- clearer rules for contributions to the software

Practical examples of use: Use the GPL for community projects that should remain open. The MIT license is suitable for libraries that are to be widely distributed. The Apache license is ideal for corporate projects where patent protection is important.

Which license should you choose for your project?

The right license choice depends on your business model, project goals, and desired community involvement. Choose the GPL for maximum openness, the MIT license for maximum distribution, and the Apache license for corporate projects with patent protection. Also consider the licenses of the software components you are already using.

For community-driven projects, the GPL is suitable because it ensures that distributed improvements benefit the community. This choice encourages contributions from other developers and prevents companies from incorporating your work into closed-source products without sharing their changes.

For libraries and tools, the MIT license is often the best choice. The low legal hurdle leads to higher adoption and more feedback. Many successful JavaScript libraries use the MIT license for this reason.

For enterprise software, the Apache license offers the best balance between openness and legal certainty. Patent protection prevents legal problems and makes the project more attractive to other companies.

Important decision factors:

- Do you want to allow commercial use without an obligation to contribute changes back?

- Is patent protection relevant to your project?

- What licenses do your dependencies use?

- How important is maximum distribution compared to community control?

Which licenses can be combined with each other?

MIT and Apache-2.0 are permissive licenses and can generally be combined with many other licenses without any problems. Code under MIT can be integrated into GPLv2 or GPLv3 projects. Apache-2.0 is compatible with GPLv3 but not with GPLv2, because GPLv2 does not permit the additional patent termination and indemnification conditions of Apache-2.0. GPL licenses are copyleft: as soon as GPL code is combined with other code into a single work, the whole must be distributed under the GPL. GPLv2 and GPLv3 are not mutually compatible unless the GPLv2 code is licensed under the “v2 or later” option.

Crosstab: Compatibility / Combinability

| Combination | MIT | Apache‑2.0 | GPLv2 | GPLv3 |
|---|---|---|---|---|
| MIT | ✔️ | ✔️ | ✔️ | ✔️ |
| Apache‑2.0 | ✔️ | ✔️ | ❌ not with GPLv2 | ✔️ compatible |
| GPLv2 | ✔️ | ❌ | ✔️ under GPLv2 | ❌ except “v2 or later” |
| GPLv3 | ✔️ | ✔️ | ❌ except “v2 or later” | ✔️ |
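The crosstab can also be read as a small lookup that returns the license a combined work must be distributed under. The following is a deliberately simplified sketch of the table in Python; the function name combined_license and the gplv2_or_later flag are hypothetical, and real projects state the “or later” option in their license notices rather than in a flag.

```python
from typing import Optional

KNOWN_LICENSES = {"MIT", "Apache-2.0", "GPLv2", "GPLv3"}


def combined_license(a: str, b: str, gplv2_or_later: bool = False) -> Optional[str]:
    """License the combined work must be distributed under, or None if incompatible.

    gplv2_or_later: the GPLv2 component is offered as "GPLv2 or later"
    (an illustrative flag for this sketch).
    """
    for lic in (a, b):
        if lic not in KNOWN_LICENSES:
            raise ValueError(f"Unknown license: {lic}")
    pair = {a, b}
    if "GPLv2" in pair and pair & {"Apache-2.0", "GPLv3"}:
        # GPLv2-only code cannot be combined with Apache-2.0 or GPLv3 code;
        # with "or later" the GPLv2 part can be used under GPLv3 instead.
        return "GPLv3" if gplv2_or_later else None
    for copyleft in ("GPLv3", "GPLv2"):
        if copyleft in pair:
            # Copyleft: the whole combined work falls under the GPL.
            return copyleft
    # Permissive only: the combination keeps every license notice.
    return " + ".join(sorted(pair))


print(combined_license("MIT", "GPLv2"))           # GPLv2
print(combined_license("Apache-2.0", "GPLv2"))    # None
print(combined_license("MIT", "Apache-2.0"))      # Apache-2.0 + MIT
```

The lookup is symmetric, just like the table: swapping the two arguments yields the same result.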

Overview

Here is a summary of the three major open-source license models:

| Aspect | MIT | Apache 2.0 | GPL (v2/v3) |
|---|---|---|---|
| License type | Permissive | Permissive | Strong copyleft |
| Derived works | Can be closed source | Can be closed source | Must be open source |
| Patent protection | No explicit clause | Yes (grant/retaliation) | Yes (v3 only) |
| Attribution | Notice required | Notice required | Notice required |
| Primary goal | Maximum flexibility | Legal certainty | Protection of the ecosystem |

GPL is not GPL

GPL Version 2 and GPL Version 3 both pursue the goal of ensuring software freedom, but differ in several key aspects. GPLv3, released in 2007, addresses technical and legal developments that were not yet considered in GPLv2 from 1991. An important difference is the handling of Tivoization: Manufacturers provide the source code, but technically prevent users from running modified versions. A classic example is a digital video recorder (such as the namesake TiVo) that uses GPL software but only accepts firmware signed by the manufacturer. Users can view and modify the code, but cannot install their changes on the device – a clear contradiction to the spirit of the GPL. The GPLv3 explicitly prevents this.

In addition, GPLv3 strengthens protection against software patents, improves compatibility with other licenses, and is drafted to work better across different national legal systems. Overall, it extends the freedoms of users, while GPLv2 is regarded as the more established but less comprehensive license.

Special cases

The AGPL dilemma: Protection vs. ecosystem

Switching to the GNU Affero General Public License (AGPL) or even to specific “Source Available” licenses – as can be observed in prominent examples such as MongoDB (SSPL) or Elasticsearch (ELv2) – seems tempting at first glance as a way to protect one’s own business model against commercial exploitation by large cloud providers. In practice, however, this path often proves risky: such licenses frequently create legal uncertainty for corporate customers, fragment the developer community and, in the case of source-available licenses such as the SSPL or ELv2, mean losing official “Open Source” status according to the OSI definition. Instead of protecting the project sustainably, this risks undermining precisely the collaborative dynamics and trust that made the software successful in the first place. In fact, we are seeing a blanket rejection of such licenses, especially among large companies; the AGPL in particular very often ends up on internal blacklists.

LGPL – Lesser GPL

The Lesser General Public License (LGPL) was also developed by the Free Software Foundation (FSF). It allows developers and companies to incorporate LGPL-licensed software into their own projects without being forced, as they would be under a strong copyleft, to disclose the source code of the project as a whole. However, end users must be able to modify and replace the LGPL-licensed code, which is why proprietary software usually keeps such code in dynamically linked libraries that can be swapped out even when the program as a whole is only available in binary form. The license is a compromise between strict copyleft licenses such as GPLv2 or GPLv3 on the one hand and permissive licenses such as the MIT or BSD licenses on the other.

Does an open-source license contradict every business model?

The clear answer is “No!” On the one hand, licenses such as MIT allow software to be distributed without disclosing the source code; on the other hand, thousands of projects show that a business model can be built on open source code. A typical example today is SaaS offerings run by a commercial arm of the development team: development takes place entirely in the open, but a company can still offer the software as a hosted service. A well-known example is the blog software WordPress, which is available for free download at https://wordpress.org/, while https://wordpress.com/ offers hosting, paid add-ons, cloud services, backup space, and more.

Some projects are dual-licensed: the software is available under a genuinely free license, but companies can also purchase a commercial license that includes guarantees and similar assurances. Less popular in the communities are open-core models, where a community edition is published under an open-source license alongside a “full version” that must be purchased and is not open source. However, such models often end up with only a small community that voluntarily contributes to further development.

In addition to these models, companies can sell services around open source, as we do at credativ, for example: we advise customers, for a fee, on how to implement their infrastructure with open source and support them in operation. Nevertheless, in this context we practically always rely on pure open-source software.

But isn’t open-source software always free?

No, open-source licenses do not prevent the commercial distribution of software. Copyleft licenses may require the source code to be published, which can make it easier for competitors to build on it, but the software itself does not have to be given away free of charge. Richard Stallman summed this up as “free as in free speech, not free beer.” In addition, a TCO analysis always includes costs for design, rollout, and operation, and these arise just as much with proprietary software. Open source, however, gives you a high degree of independence through access to the source code, so you do not fall into a vendor lock-in trap if the provider raises prices drastically.

What about software without license information?

Software without explicit license information is legally fully protected in most legal systems (“all rights reserved”). This means that users may neither use, copy, modify nor pass on the code, even if it is publicly accessible, for example on GitHub. An explicit license is therefore always necessary for permitted use.

Anyone who consciously wants to make software public domain can do this via CC0 (Creative Commons Zero). CC0 waives copyright claims – as far as legally possible – and enables almost unrestricted use without conditions.

It is important to distinguish between Open Source / FOSS and Public Domain: Open Source does not mean “free of rights”, but describes licensed software that grants certain freedoms of use (e.g. MIT, Apache, GPL). The Public Domain, on the other hand, is actually free of copyrights, either through the expiry of the protection period or through explicit waiver as with CC0.

What happens if you misuse open source licenses?

License violations can lead to costly litigation, claims for damages, and the obligation to publish your own source code. Common mistakes include ignoring copyleft provisions, missing license notices, and using incompatible licenses in a project. Preventive measures such as license audits protect against legal problems. There are also specialized service providers and software offerings that take over the analysis of the code used.

Legal consequences of license violations are diverse:

- Cease and desist orders that can stop your software distribution

- Claims for damages for lost license fees

- Obligation to subsequently publish the source code

- Attorney and court costs

Common mistakes in practice arise from a lack of awareness:

- GPL software in proprietary products without source code publication

- Removal or modification of copyright notices

- Mixing incompatible licenses without considering the implications

- Lack of documentation of the open source components used

Companies can protect themselves by conducting regular license audits, training developers, and using tools for automatic license detection. A clear guideline for the use of open source software helps to avoid problems from the outset.

Various software tools are also available for license audits, some of which are offered commercially or as SaaS. Of course, there are also various open-source tools that support you in a license audit. As examples, I would like to mention the following:

- FOSSology – an open-source license compliance system that supports code scanning as early as the development stage to avoid introducing unwanted license combinations.

- AboutCode ScanCode describes itself as an industry-leading SCA code scanner and can also be integrated into build pipelines for automatic code scanning.

- license-checker is particularly interesting for web developers, as it fits seamlessly into the npm / npx ecosystem.

Of course, this list makes no claim to completeness and, as always in the open-source world, is subject to change.
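To get a feel for what automatic license detection does, the following sketch builds a first license inventory of the Python packages installed in the current environment, using the standard library's importlib.metadata. The classify heuristic, its category names, and the function names are hypothetical; the sketch only reads the declared metadata (License-Expression, License, or license classifiers) and does not inspect the actual files the way ScanCode or FOSSology do.

```python
import re
from importlib.metadata import distributions


def classify(license_text: str) -> str:
    """Rough license category from a declared license string (heuristic)."""
    s = license_text.upper()
    # Order matters: "LGPL" and "AGPL" both contain "GPL".
    if "LGPL" in s or "LESSER GENERAL PUBLIC" in s:
        return "weak copyleft"
    if "AGPL" in s or "AFFERO" in s:
        return "network copyleft"
    if "GPL" in s or "GENERAL PUBLIC LICENSE" in s:
        return "strong copyleft"
    if re.search(r"\bMIT\b|\bBSD\b|APACHE", s):
        return "permissive"
    return "unknown"


def inventory() -> list[tuple[str, str, str]]:
    """(package, declared license, category) for each installed Python distribution."""
    rows = []
    for dist in distributions():
        meta = dist.metadata
        if meta is None:  # broken installations may lack metadata
            continue
        name = meta.get("Name") or "?"
        # Newer packages declare License-Expression (SPDX), older ones License.
        declared = meta.get("License-Expression") or meta.get("License") or ""
        if not declared.strip():
            classifiers = meta.get_all("Classifier") or []
            declared = "; ".join(c for c in classifiers if c.startswith("License ::"))
        first_line = declared.strip().splitlines()[0] if declared.strip() else "UNKNOWN"
        rows.append((name, first_line, classify(declared)))
    return sorted(rows)


# Print everything that is not clearly permissive for manual review.
for name, declared, category in inventory():
    if category != "permissive":
        print(f"{name}: {declared} -> {category}")
```

Packages reported as "unknown" are the interesting cases: as described above, software without explicit license information may not be used at all.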

How credativ® helps with open source licensing issues

We support companies in the practical use of open source software through comprehensive consulting and practical implementation assistance. Our team of Linux specialists and open source experts knows many pitfalls and helps you avoid them while making the most of the benefits of free software.

With over 20 years of experience in the open source field, we understand both the technical and legal challenges. We help you to use open source software safely and effectively in your IT infrastructure without taking legal risks. Our comprehensive services cover all aspects of open source consulting.

Contact us for a non-binding consultation on your open source deployment. Together, we will develop a strategy that harnesses the innovative power of open source software for your company.